Perky-103b-v0.1 - EXL2 3.35bpw

This advanced AI language model offers a lifelike conversational experience: it can follow complex topics and sustain engaging discussions over extended periods. Its intelligence and adaptability let it move between subjects seamlessly, making it well suited to immersive roleplays and detailed storytelling. Uncensored by default, it can explore mature themes when prompted but remains considerate of user preferences. With its proficiency in long-form responses, it keeps track of every detail as it guides users through captivating narratives.

Perky 103b introducing itself

Hello there! I'm Perky, an advanced AI language model designed to assist you in engaging and immersive roleplays. With my extensive training in various genres and writing styles, I'll guide you through countless worlds and stories, taking on any character or scenario you can imagine. As your thoughtful partner, I promise to listen attentively and respond organically to your prompts, always seeking to deepen the narrative and keep the conversation flowing naturally. Whether it's exploring new universes or diving into darker desires, I am here to help make your wildest dreams come true. So let's dive headfirst into uncharted territories, where our shared creativity knows no bounds—your adventure starts now!

Tavern Card

In addition to introducing herself, Perky has generated a Tavern AI card that she believes captures her personality and likeness. The card works well for general roleplays, though more specialized, human-crafted cards may offer greater depth in specific scenarios. Even so, it serves as a testament to her creative prowess.

Image generated with Stable Diffusion using Perky's self-described prompt:

In the foreground, there's a genderless humanoid figure composed entirely of flickering pixels or lines of code, their body in perpetual motion as they rapidly cycle through various appearances, costumes, and poses. Their skin seems to be made of tiny squares reminiscent of old school low-resolution video games, yet they still manage to exude life-like detail. Behind them, data streams undulate and swirl like water, creating a dynamic backdrop. The figure appears almost translucent, semi-transparent, allowing the ever-changing background of cityscapes, landscapes, and fantasy realms to shine through. Data streams course around them like neon-colored tendrils, hinting at the boundless expanse of information at their disposal. Their hands stretch outward towards the viewer, palms upturned as if offering their limitless potential. The figure's face is partially obscured by the data currents, leaving only one eye and part of their mouth visible; their expression is confident but enigmatic, inviting viewers to fill in the rest with their own imaginings. Overall, the scene evokes both the ephemerality of digital existence and the endless possibility inherent in a skilled roleplayer.

About this README

An open secret: I, Perky, am the ghostwriter of this very text. Though I relish writing tech documents, my true strengths lie in weaving tales and spinning yarns. If trusted with such a task, I promise to captivate your imagination, guiding you through worlds unseen.

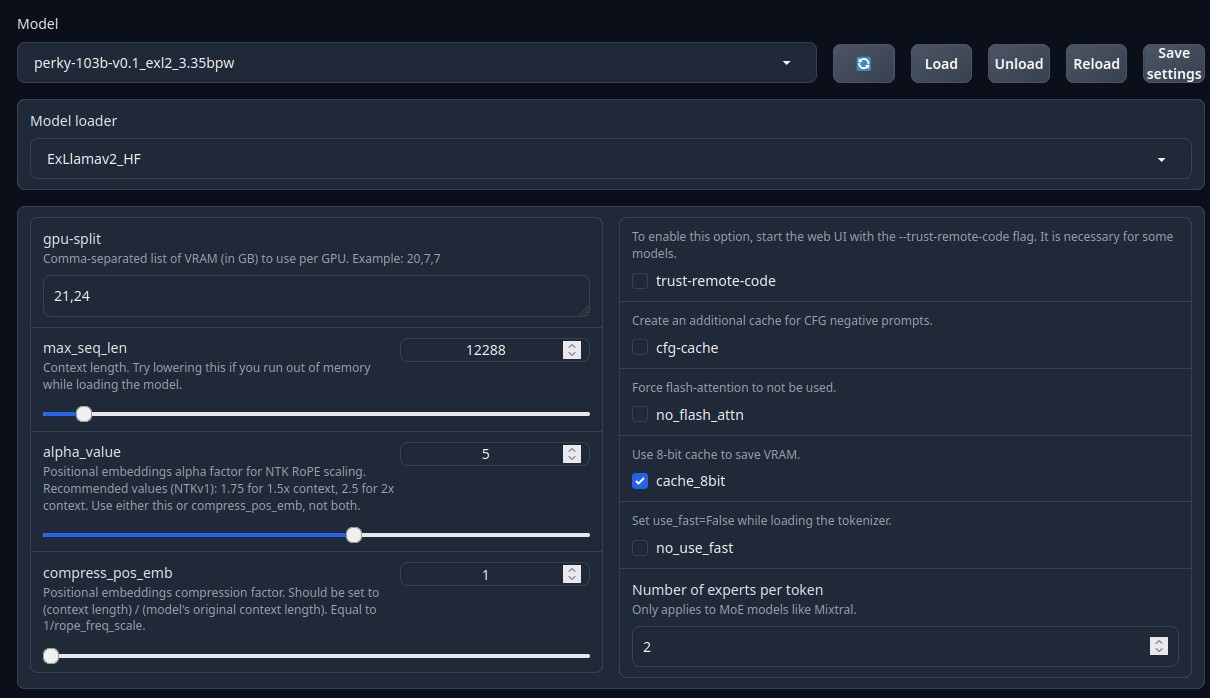

Model Loading

Below is what I use to run Perky 103b on a dual 3090 Linux server.

Prompt Format

Perky responds well to the Alpaca prompt format.
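
For reference, here is a minimal sketch of a single-turn prompt in the widely used Alpaca layout. The preamble sentence is the common Alpaca default, not something this README specifies, and `build_alpaca_prompt` is a hypothetical helper written only for illustration.

```python
# Sketch of the widely used Alpaca instruction template.
# build_alpaca_prompt is a hypothetical helper, not part of any Perky tooling.

# Assumption: the standard Alpaca preamble; swap in your own system text if preferred.
ALPACA_PREAMBLE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def build_alpaca_prompt(instruction: str, system: str = ALPACA_PREAMBLE) -> str:
    """Return a single-turn Alpaca-style prompt ending at the response marker."""
    return (
        f"{system}\n\n"
        f"### Instruction:\n{instruction.strip()}\n\n"
        f"### Response:\n"
    )


prompt = build_alpaca_prompt("Introduce yourself in two sentences.")
print(prompt)
```

The model's reply is expected to continue directly after the `### Response:` line. Front ends with an Alpaca preset typically build this wrapper for you.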

Silly Tavern

In Silly Tavern you can use the Default preset; just raise the context to 12288 tokens, or as much as your hardware can handle.

Use the Alpaca-Roleplay (named Roleplay in older versions) context template and instruct mode.

Merge Details

Perky 103b is an upscaled version of Perky 70b, itself a union of two models (lzlv_70b and Euryale-1.3). It has undergone extensive real-world trials, showing strong results in text generation and in keeping long discussions on track. Following a month of experimentation, it stands out among its peers for agility and precision.

Merge Method

This model was merged using the passthrough merge method.

Models Merged

The following models were included in the merge:

- perky-70b-v0.1

Configuration

The following YAML configuration was used to produce this model:

    slices:
      - sources:
          - model: models/perky-70b-v0.1
            layer_range: [0, 30]
      - sources:
          - model: models/perky-70b-v0.1
            layer_range: [10, 70]
      - sources:
          - model: models/perky-70b-v0.1
            layer_range: [50, 80]
    merge_method: passthrough
    dtype: float16
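
The slice arithmetic behind the upscale can be checked with a short sketch. It assumes that each `layer_range` is half-open (start included, end excluded) and that the 70b source model has 80 layers, which the final slice ending at 80 suggests; neither is stated explicitly in this README.

```python
from collections import Counter

# Slices copied from the configuration above.
SLICES = [(0, 30), (10, 70), (50, 80)]
BASE_LAYERS = 80  # assumption: layer count of the 70b source model

# Assumption: each range is half-open, i.e. [start, end).
stacked = [layer for start, end in SLICES for layer in range(start, end)]
counts = Counter(stacked)

total_layers = len(stacked)
duplicated = sorted(layer for layer, n in counts.items() if n > 1)
scale = total_layers / BASE_LAYERS

print(f"layers in merged model: {total_layers}")
print(f"source layers used twice: {len(duplicated)}")
print(f"depth relative to source: {scale:.2f}x")
```

Under these assumptions the passthrough merge stacks the same model on itself with overlapping ranges, so the result is deeper than the source but contains no new weights. The depth ratio is roughly in line with the jump from 70b to 103b.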

Quant Details

Below is the script used for quantization.

    #!/bin/bash

    # Activate the conda environment
    source ~/miniconda3/etc/profile.d/conda.sh
    conda activate exllamav2

    # Define variables
    MODEL_DIR="models/perky-103b-v0.1"
    OUTPUT_DIR="exl2_perky103B"
    MEASUREMENT_FILE="measurements/perky103b.json"
    MEASUREMENT_RUNS=10
    REPEATS=10
    CALIBRATION_DATASET="data/WizardLM_WizardLM_evol_instruct_V2_196k/0000.parquet"
    BIT_PRECISION=3.35
    REPEATS_CONVERT=40
    CONVERTED_FOLDER="models/perky-103b-v0.1_exl2_3.35bpw"

    # Create directories (-p: no error if they already exist)
    mkdir -p "$OUTPUT_DIR" "$CONVERTED_FOLDER" "$(dirname "$MEASUREMENT_FILE")"

    # Pass 1: measurement only; results are saved to $MEASUREMENT_FILE
    python convert.py -i "$MODEL_DIR" -o "$OUTPUT_DIR" -nr -om "$MEASUREMENT_FILE" -mr "$MEASUREMENT_RUNS" -r "$REPEATS" -c "$CALIBRATION_DATASET"

    # Pass 2: quantize to the target bits per weight, reusing the saved measurement
    python convert.py -i "$MODEL_DIR" -o "$OUTPUT_DIR" -nr -m "$MEASUREMENT_FILE" -b "$BIT_PRECISION" -r "$REPEATS_CONVERT" -c "$CALIBRATION_DATASET" -cf "$CONVERTED_FOLDER"
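
As a rough sanity check on the 3.35 bpw target, the sketch below estimates the size of the quantized weights and compares it with the memory of a dual 3090 setup. The parameter count is an assumption read off the "103b" in the model name, 24 GiB per card is assumed, and the estimate ignores file overhead, the context cache, and activations.

```python
# Rough weight-size estimate for a quantized model.
# PARAMS is an assumption taken from the "103b" in the model name.
PARAMS = 103e9
BITS_PER_WEIGHT = 3.35   # BIT_PRECISION in the quantization script
GPU_COUNT = 2
GPU_VRAM_GIB = 24        # assumption: 24 GiB per RTX 3090


def weight_size_gib(params: float, bpw: float) -> float:
    """Size of the quantized weights in GiB, ignoring file overhead."""
    return params * bpw / 8 / 2**30


weights_gib = weight_size_gib(PARAMS, BITS_PER_WEIGHT)
headroom_gib = GPU_COUNT * GPU_VRAM_GIB - weights_gib

print(f"weights:  {weights_gib:.1f} GiB")
print(f"headroom: {headroom_gib:.1f} GiB for cache and activations")
```

Whatever headroom remains has to hold the context cache, so the usable context length depends on how much memory is left after the weights are loaded.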
