Commit 656b5ab

Parent(s): 1351f60

Upload folder using huggingface_hub

- .DS_Store +0 -0

- .gitattributes +5 -0

- .gitignore +157 -0

- README.md +19 -2

- dataset/training/gt/p_00a4eda7.png +0 -0

- dataset/training/gt/p_00a5b702.png +0 -0

- dataset/training/im/p_00a4eda7.png +3 -0

- dataset/training/im/p_00a5b702.png +3 -0

- dataset/validation/gt/p_00a7a27c.png +0 -0

- dataset/validation/im/p_00a7a27c.png +3 -0

- environment.yaml +199 -0

- examples/.DS_Store +0 -0

- examples/image/image01.png +3 -0

- examples/image/image01_no_background.png +3 -0

- examples/loss/gt.png +0 -0

- examples/loss/loss01.png +0 -0

- examples/loss/loss02.png +0 -0

- examples/loss/loss03.png +0 -0

- examples/loss/loss04.png +0 -0

- examples/loss/loss05.png +0 -0

- examples/loss/orginal.jpg +0 -0

- ormbg/.DS_Store +0 -0

- ormbg/basics.py +79 -0

- ormbg/data_loader_cache.py +489 -0

- ormbg/inference.py +110 -0

- ormbg/models/ormbg.py +484 -0

- ormbg/train_model.py +474 -0

- utils/.DS_Store +0 -0

- utils/architecture.py +4 -0

- utils/loss_example.py +69 -0

- utils/pth_to_onnx.py +3 -3

.DS_Store

CHANGED

|

Binary files a/.DS_Store and b/.DS_Store differ

|

|

|

.gitattributes

CHANGED

@@ -38,3 +38,8 @@ no-background.png filter=lfs diff=lfs merge=lfs -text
 examples/example1.png filter=lfs diff=lfs merge=lfs -text
 examples/no-background1.png filter=lfs diff=lfs merge=lfs -text
 examples.jpg filter=lfs diff=lfs merge=lfs -text
+dataset/training/im/p_00a4eda7.png filter=lfs diff=lfs merge=lfs -text
+dataset/training/im/p_00a5b702.png filter=lfs diff=lfs merge=lfs -text
+dataset/validation/im/p_00a7a27c.png filter=lfs diff=lfs merge=lfs -text
+examples/image/image01.png filter=lfs diff=lfs merge=lfs -text
+examples/image/image01_no_background.png filter=lfs diff=lfs merge=lfs -text
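Each `.gitattributes` entry above pairs a path pattern with the attributes `filter=lfs diff=lfs merge=lfs -text`, which routes the file through Git LFS. The sketch below is not part of the commit; it only illustrates how such lines can be read. `lfs_patterns` is a hypothetical helper name, and the parser assumes plain whitespace-separated lines without quoted patterns.

```python
def lfs_patterns(gitattributes_text):
    """Return the path patterns whose attributes include filter=lfs."""
    patterns = []
    for line in gitattributes_text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            patterns.append(pattern)
    return patterns


# Two entries taken from the diff above plus one made-up non-LFS entry.
sample = """\
examples.jpg filter=lfs diff=lfs merge=lfs -text
dataset/training/im/p_00a4eda7.png filter=lfs diff=lfs merge=lfs -text
README.md text
"""
print(lfs_patterns(sample))
```

Only the first two patterns are reported, because the third line carries no `filter=lfs` attribute.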

.gitignore

ADDED

@@ -0,0 +1,157 @@
+# Byte-compiled / optimized / DLL files
+__pycache__/
+*.py[cod]
+*$py.class
+
+# C extensions
+*.so
+
+# Distribution / packaging
+.Python
+build/
+develop-eggs/
+dist/
+downloads/
+eggs/
+.eggs/
+lib/
+lib64/
+parts/
+sdist/
+var/
+wheels/
+share/python-wheels/
+*.egg-info/
+.installed.cfg
+*.egg
+MANIFEST
+
+# PyInstaller
+# Usually these files are written by a python script from a template
+# before PyInstaller builds the exe, so as to inject date/other infos into it.
+*.manifest
+*.spec
+
+# Installer logs
+pip-log.txt
+pip-delete-this-directory.txt
+
+# Unit test / coverage reports
+htmlcov/
+.tox/
+.nox/
+.coverage
+.coverage.*
+.cache
+nosetests.xml
+coverage.xml
+*.cover
+*.py,cover
+.hypothesis/
+.pytest_cache/
+cover/
+
+# Translations
+*.mo
+*.pot
+
+# Django stuff:
+*.log
+local_settings.py
+db.sqlite3
+db.sqlite3-journal
+
+# Flask stuff:
+instance/
+.webassets-cache
+
+# Scrapy stuff:
+.scrapy
+
+# Sphinx documentation
+docs/_build/
+
+# PyBuilder
+.pybuilder/
+target/
+
+# Jupyter Notebook
+.ipynb_checkpoints
+
+# IPython
+profile_default/
+ipython_config.py
+
+# pyenv
+# For a library or package, you might want to ignore these files since the code is
+# intended to run in multiple environments; otherwise, check them in:
+# .python-version
+
+# pipenv
+# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
+# However, in case of collaboration, if having platform-specific dependencies or dependencies
+# having no cross-platform support, pipenv may install dependencies that don't work, or not
+# install all needed dependencies.
+#Pipfile.lock
+
+# poetry
+# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
+# This is especially recommended for binary packages to ensure reproducibility, and is more
+# commonly ignored for libraries.
+# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
+#poetry.lock
+
+# pdm
+# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
+#pdm.lock
+# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
+# in version control.
+# https://pdm.fming.dev/latest/usage/project/#working-with-version-control
+.pdm.toml
+.pdm-python
+.pdm-build/
+
+# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
+__pypackages__/
+
+# Celery stuff
+celerybeat-schedule
+celerybeat.pid
+
+# SageMath parsed files
+*.sage.py
+
+# Environments
+.env
+.venv
+env/
+venv/
+ENV/
+env.bak/
+venv.bak/
+
+# Spyder project settings
+.spyderproject
+.spyproject
+
+# Rope project settings
+.ropeproject
+
+# mkdocs documentation
+/site
+
+# mypy
+.mypy_cache/
+.dmypy.json
+dmypy.json
+
+# Pyre type checker
+.pyre/
+
+# pytype static type analyzer
+.pytype/
+
+# Cython debug symbols
+cython_debug/
+
+models/*

README.md

CHANGED

|

@@ -15,7 +15,9 @@ datasets:

|

|

| 15 |

|

| 16 |

[>>> DEMO <<<](https://huggingface.co/spaces/schirrmacher/ormbg)

|

| 17 |

|

| 18 |

-

|

|

|

|

|

|

|

| 19 |

|

| 20 |

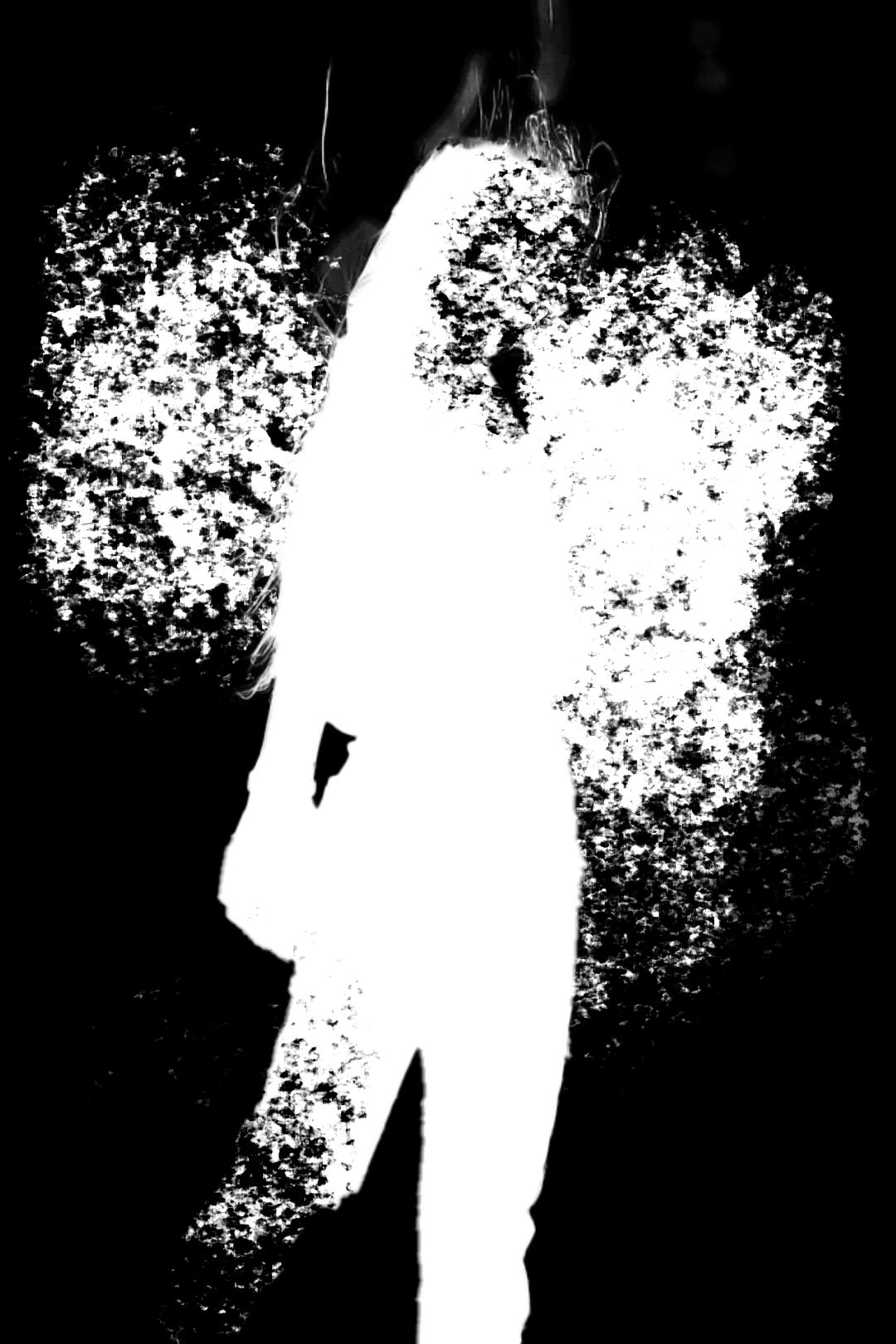

This model is a **fully open-source background remover** optimized for images with humans. It is based on [Highly Accurate Dichotomous Image Segmentation research](https://github.com/xuebinqin/DIS). The model was trained with the synthetic [Human Segmentation Dataset](https://huggingface.co/datasets/schirrmacher/humans), [P3M-10k](https://paperswithcode.com/dataset/p3m-10k) and [AIM-500](https://paperswithcode.com/dataset/aim-500).

|

| 21 |

|

|

@@ -24,7 +26,22 @@ This model is similar to [RMBG-1.4](https://huggingface.co/briaai/RMBG-1.4), but

|

|

| 24 |

## Inference

|

| 25 |

|

| 26 |

```

|

| 27 |

-

python

|


| 28 |

```

|

| 29 |

|

| 30 |

# Research

|

|

|

|

| 15 |

|

| 16 |

[>>> DEMO <<<](https://huggingface.co/spaces/schirrmacher/ormbg)

|

| 17 |

|

| 18 |

+

Join our [Research Discord Group](https://discord.gg/YYZ3D66t)!

|

| 19 |

+

|

| 20 |

+

|

| 21 |

|

| 22 |

This model is a **fully open-source background remover** optimized for images with humans. It is based on [Highly Accurate Dichotomous Image Segmentation research](https://github.com/xuebinqin/DIS). The model was trained with the synthetic [Human Segmentation Dataset](https://huggingface.co/datasets/schirrmacher/humans), [P3M-10k](https://paperswithcode.com/dataset/p3m-10k) and [AIM-500](https://paperswithcode.com/dataset/aim-500).

|

| 23 |

|

|

|

|

| 26 |

## Inference

|

| 27 |

|

| 28 |

```

|

| 29 |

+

python ormbg/inference.py

|

| 30 |

+

```

|

| 31 |

+

|

| 32 |

+

## Training

|

| 33 |

+

|

| 34 |

+

Install dependencies:

|

| 35 |

+

|

| 36 |

+

```

|

| 37 |

+

conda env create -f environment.yaml

|

| 38 |

+

conda activate ormbg

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

Replace the dummy dataset with the [training dataset](https://huggingface.co/datasets/schirrmacher/humans).

|

| 42 |

+

|

| 43 |

+

```

|

| 44 |

+

python3 ormbg/train_model.py

|

| 45 |

```

|

| 46 |

|

| 47 |

# Research

|
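The dummy dataset added in this commit stores each sample twice under the same file name: the image in `im/` and its mask in `gt/`. Before swapping in a larger dataset it can help to confirm that every image has a mask. The sketch below is an illustration under that layout assumption, not something the repository ships; `unpaired_images` is a hypothetical helper, and the demo builds a throwaway directory instead of touching `dataset/`.

```python
from pathlib import Path
import tempfile


def unpaired_images(split_dir):
    """Return stems of files in im/ that have no same-named file in gt/."""
    split = Path(split_dir)
    im_stems = {p.stem for p in (split / "im").iterdir() if p.is_file()}
    gt_stems = {p.stem for p in (split / "gt").iterdir() if p.is_file()}
    return sorted(im_stems - gt_stems)


# Demo on a temporary directory that mimics dataset/training/.
with tempfile.TemporaryDirectory() as tmp:
    for sub in ("im", "gt"):
        (Path(tmp) / sub).mkdir()
    for name in ("p_00a4eda7.png", "p_00a5b702.png"):
        (Path(tmp) / "im" / name).touch()
    (Path(tmp) / "gt" / "p_00a4eda7.png").touch()  # second mask left out on purpose
    missing = unpaired_images(tmp)

print(missing)
```

Stems are compared rather than full names, so an image and its mask may use different extensions.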

dataset/training/gt/p_00a4eda7.png

ADDED

|

dataset/training/gt/p_00a5b702.png

ADDED

|

dataset/training/im/p_00a4eda7.png

ADDED

|

Git LFS Details

|

dataset/training/im/p_00a5b702.png

ADDED

|

Git LFS Details

|

dataset/validation/gt/p_00a7a27c.png

ADDED

|

dataset/validation/im/p_00a7a27c.png

ADDED

|

Git LFS Details

|

environment.yaml

ADDED

@@ -0,0 +1,199 @@
+name: ormbg
+channels:
+  - pytorch
+  - nvidia
+  - anaconda
+  - defaults
+dependencies:
+  - _libgcc_mutex=0.1=main
+  - _openmp_mutex=5.1=1_gnu
+  - aom=3.6.0=h6a678d5_0
+  - blas=1.0=mkl
+  - blosc=1.21.3=h6a678d5_0
+  - brotli=1.0.9=h5eee18b_7
+  - brotli-bin=1.0.9=h5eee18b_7
+  - brotli-python=1.0.9=py38h6a678d5_7
+  - brunsli=0.1=h2531618_0
+  - bzip2=1.0.8=h7b6447c_0
+  - c-ares=1.19.1=h5eee18b_0
+  - ca-certificates=2023.08.22=h06a4308_0
+  - certifi=2023.7.22=py38h06a4308_0
+  - cffi=1.15.0=py38h7f8727e_0
+  - cfitsio=3.470=h5893167_7
+  - charls=2.2.0=h2531618_0
+  - charset-normalizer=2.0.4=pyhd3eb1b0_0
+  - click=8.1.7=py38h06a4308_0
+  - cloudpickle=2.2.1=py38h06a4308_0
+  - contourpy=1.0.5=py38hdb19cb5_0
+  - cryptography=41.0.3=py38h130f0dd_0
+  - cuda-cudart=11.8.89=0
+  - cuda-cupti=11.8.87=0
+  - cuda-libraries=11.8.0=0
+  - cuda-nvrtc=11.8.89=0
+  - cuda-nvtx=11.8.86=0
+  - cuda-runtime=11.8.0=0
+  - cudatoolkit=11.8.0=h6a678d5_0
+  - cycler=0.11.0=pyhd3eb1b0_0
+  - cytoolz=0.12.0=py38h5eee18b_0
+  - dask-core=2023.4.1=py38h06a4308_0
+  - dav1d=1.2.1=h5eee18b_0
+  - dbus=1.13.18=hb2f20db_0
+  - expat=2.5.0=h6a678d5_0
+  - ffmpeg=4.3=hf484d3e_0
+  - fftw=3.3.9=h27cfd23_1
+  - filelock=3.9.0=py38h06a4308_0
+  - fontconfig=2.14.1=h52c9d5c_1
+  - fonttools=4.25.0=pyhd3eb1b0_0
+  - freetype=2.12.1=h4a9f257_0
+  - fsspec=2023.9.2=py38h06a4308_0
+  - giflib=5.2.1=h5eee18b_3
+  - glib=2.63.1=h5a9c865_0
+  - gmp=6.2.1=h295c915_3
+  - gmpy2=2.1.2=py38heeb90bb_0
+  - gnutls=3.6.15=he1e5248_0
+  - gst-plugins-base=1.14.0=hbbd80ab_1
+  - gstreamer=1.14.0=hb453b48_1
+  - icu=58.2=he6710b0_3
+  - idna=3.4=py38h06a4308_0
+  - imagecodecs=2023.1.23=py38hc4b7b5f_0
+  - imageio=2.31.4=py38h06a4308_0
+  - importlib-metadata=6.0.0=py38h06a4308_0
+  - importlib_resources=6.1.0=py38h06a4308_0
+  - intel-openmp=2021.4.0=h06a4308_3561
+  - jinja2=3.1.2=py38h06a4308_0
+  - jpeg=9e=h5eee18b_1
+  - jxrlib=1.1=h7b6447c_2
+  - kiwisolver=1.4.4=py38h6a678d5_0
+  - krb5=1.20.1=h568e23c_1
+  - lame=3.100=h7b6447c_0
+  - lazy_loader=0.3=py38h06a4308_0
+  - lcms2=2.12=h3be6417_0
+  - lerc=3.0=h295c915_0
+  - libaec=1.0.4=he6710b0_1
+  - libavif=0.11.1=h5eee18b_0
+  - libbrotlicommon=1.0.9=h5eee18b_7
+  - libbrotlidec=1.0.9=h5eee18b_7
+  - libbrotlienc=1.0.9=h5eee18b_7
+  - libcublas=11.11.3.6=0
+  - libcufft=10.9.0.58=0
+  - libcufile=1.8.1.2=0
+  - libcurand=10.3.4.101=0
+  - libcurl=7.88.1=h91b91d3_2
+  - libcusolver=11.4.1.48=0
+  - libcusparse=11.7.5.86=0
+  - libdeflate=1.17=h5eee18b_1
+  - libedit=3.1.20221030=h5eee18b_0
+  - libev=4.33=h7f8727e_1
+  - libffi=3.2.1=hf484d3e_1007
+  - libgcc-ng=11.2.0=h1234567_1
+  - libgfortran-ng=11.2.0=h00389a5_1
+  - libgfortran5=11.2.0=h1234567_1
+  - libgomp=11.2.0=h1234567_1
+  - libiconv=1.16=h7f8727e_2
+  - libidn2=2.3.4=h5eee18b_0
+  - libjpeg-turbo=2.0.0=h9bf148f_0
+  - libnghttp2=1.52.0=ha637b67_1
+  - libnpp=11.8.0.86=0
+  - libnvjpeg=11.9.0.86=0
+  - libpng=1.6.39=h5eee18b_0
+  - libssh2=1.10.0=h37d81fd_2
+  - libstdcxx-ng=11.2.0=h1234567_1
+  - libtasn1=4.19.0=h5eee18b_0
+  - libtiff=4.5.1=h6a678d5_0
+  - libunistring=0.9.10=h27cfd23_0
+  - libuuid=1.41.5=h5eee18b_0
+  - libwebp=1.3.2=h11a3e52_0
+  - libwebp-base=1.3.2=h5eee18b_0
+  - libxcb=1.15=h7f8727e_0
+  - libxml2=2.9.14=h74e7548_0
+  - libzopfli=1.0.3=he6710b0_0
+  - llvm-openmp=14.0.6=h9e868ea_0
+  - locket=1.0.0=py38h06a4308_0
+  - lz4-c=1.9.4=h6a678d5_0
+  - markupsafe=2.1.1=py38h7f8727e_0
+  - matplotlib=3.7.2=py38h06a4308_0
+  - matplotlib-base=3.7.2=py38h1128e8f_0
+  - mkl=2021.4.0=h06a4308_640
+  - mkl-service=2.4.0=py38h7f8727e_0
+  - mkl_fft=1.3.1=py38hd3c417c_0
+  - mkl_random=1.2.2=py38h51133e4_0
+  - mpc=1.1.0=h10f8cd9_1
+  - mpfr=4.0.2=hb69a4c5_1
+  - mpmath=1.3.0=py38h06a4308_0
+  - munkres=1.1.4=py_0
+  - ncurses=6.4=h6a678d5_0
+  - nettle=3.7.3=hbbd107a_1
+  - networkx=3.1=py38h06a4308_0
+  - openh264=2.1.1=h4ff587b_0
+  - openjpeg=2.4.0=h3ad879b_0
+  - openssl=1.1.1w=h7f8727e_0
+  - packaging=23.1=py38h06a4308_0
+  - partd=1.4.1=py38h06a4308_0
+  - pcre=8.45=h295c915_0
+  - pillow=10.0.1=py38ha6cbd5a_0
+  - pip=23.3=py38h06a4308_0
+  - pycparser=2.21=pyhd3eb1b0_0
+  - pyopenssl=23.2.0=py38h06a4308_0
+  - pyparsing=3.0.9=py38h06a4308_0
+  - pyqt=5.9.2=py38h05f1152_4
+  - pysocks=1.7.1=py38h06a4308_0
+  - python=3.8.0=h0371630_2
+  - python-dateutil=2.8.2=pyhd3eb1b0_0
+  - pytorch=2.1.1=py3.8_cuda11.8_cudnn8.7.0_0
+  - pytorch-cuda=11.8=h7e8668a_5
+  - pytorch-mutex=1.0=cuda
+  - pywavelets=1.4.1=py38h5eee18b_0
+  - pyyaml=6.0.1=py38h5eee18b_0
+  - qt=5.9.7=h5867ecd_1
+  - readline=7.0=h7b6447c_5
+  - requests=2.31.0=py38h06a4308_0
+  - setuptools=68.0.0=py38h06a4308_0
+  - sip=4.19.13=py38h295c915_0
+  - six=1.16.0=pyhd3eb1b0_1
+  - snappy=1.1.9=h295c915_0
+  - sqlite=3.33.0=h62c20be_0
+  - sympy=1.11.1=py38h06a4308_0
+  - tifffile=2023.4.12=py38h06a4308_0
+  - tk=8.6.12=h1ccaba5_0
+  - toolz=0.12.0=py38h06a4308_0
+  - torchaudio=2.1.1=py38_cu118
+  - torchtriton=2.1.0=py38
+  - torchvision=0.16.1=py38_cu118
+  - tornado=6.3.3=py38h5eee18b_0
+  - tqdm=4.65.0=py38hb070fc8_0
+  - urllib3=1.26.18=py38h06a4308_0
+  - wheel=0.41.2=py38h06a4308_0
+  - xz=5.4.2=h5eee18b_0
+  - yaml=0.2.5=h7b6447c_0
+  - zfp=1.0.0=h6a678d5_0
+  - zipp=3.11.0=py38h06a4308_0
+  - zlib=1.2.13=h5eee18b_0
+  - zstd=1.5.5=hc292b87_0
+  - pip:
+    - albucore==0.0.12
+    - albumentations==1.4.11
+    - annotated-types==0.7.0
+    - appdirs==1.4.4
+    - conda-pack==0.7.1
+    - docker-pycreds==0.4.0
+    - eval-type-backport==0.2.0
+    - gitdb==4.0.11
+    - gitpython==3.1.40
+    - joblib==1.4.2
+    - numpy==1.24.4
+    - opencv-python-headless==4.10.0.84
+    - protobuf==4.25.1
+    - psutil==5.9.6
+    - pydantic==2.8.2
+    - pydantic-core==2.20.1
+    - scikit-image==0.21.0
+    - scikit-learn==1.3.2
+    - scipy==1.10.1
+    - sentry-sdk==1.35.0
+    - setproctitle==1.3.3
+    - smmap==5.0.1
+    - threadpoolctl==3.5.0
+    - tomli==2.0.1
+    - typing-extensions==4.12.2
+    - wandb==0.16.0
+prefix: /home/macher/miniconda3/envs/ormbg
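The environment file mixes two pin styles: conda entries of the form `name=version=build` and pip entries of the form `name==version`. Pins that include a build string are generally tied to the platform they were exported from, so it can be useful to separate the three parts when porting the file. The sketch below is an illustration only; `parse_pin` is a hypothetical helper and is not part of the repository.

```python
def parse_pin(spec):
    """Split a dependency pin into (name, version, build).

    Conda pins look like 'name=version=build'; pip pins look like
    'name==version' and carry no build string.
    """
    if "==" in spec:
        name, version = spec.split("==", 1)
        return name, version, None
    parts = spec.split("=")
    name = parts[0]
    version = parts[1] if len(parts) > 1 else None
    build = parts[2] if len(parts) > 2 else None
    return name, version, build


# Two pins copied from environment.yaml above.
conda_pin = parse_pin("pytorch=2.1.1=py3.8_cuda11.8_cudnn8.7.0_0")
pip_pin = parse_pin("numpy==1.24.4")
print(conda_pin)
print(pip_pin)
```

Dropping the build component (keeping only `name=version`) is one common way to make such a file resolvable on another platform, at the cost of exact reproducibility.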

examples/.DS_Store

ADDED

|

Binary file (6.15 kB).

|

|

|

examples/image/image01.png

ADDED

|

Git LFS Details

|

examples/image/image01_no_background.png

ADDED

|

Git LFS Details

|

examples/loss/gt.png

ADDED

|

examples/loss/loss01.png

ADDED

|

examples/loss/loss02.png

ADDED

|

examples/loss/loss03.png

ADDED

|

examples/loss/loss04.png

ADDED

|

examples/loss/loss05.png

ADDED

|

examples/loss/orginal.jpg

ADDED

|

ormbg/.DS_Store

ADDED

|

Binary file (6.15 kB).

|

|

|

ormbg/basics.py

ADDED

@@ -0,0 +1,79 @@
+import os
+
+# os.environ['CUDA_VISIBLE_DEVICES'] = '2'
+from skimage import io, transform
+import torch
+import torchvision
+from torch.autograd import Variable
+import torch.nn as nn
+import torch.nn.functional as F
+from torch.utils.data import Dataset, DataLoader
+from torchvision import transforms, utils
+import torch.optim as optim
+
+import matplotlib.pyplot as plt
+import numpy as np
+from PIL import Image
+import glob
+
+
+def mae_torch(pred, gt):
+
+    h, w = gt.shape[0:2]
+    sumError = torch.sum(torch.absolute(torch.sub(pred.float(), gt.float())))
+    maeError = torch.divide(sumError, float(h) * float(w) * 255.0 + 1e-4)
+
+    return maeError
+
+
+def f1score_torch(pd, gt):
+
+    # print(gt.shape)
+    gtNum = torch.sum((gt > 128).float() * 1)  ## number of ground truth pixels
+
+    pp = pd[gt > 128]
+    nn = pd[gt <= 128]
+
+    pp_hist = torch.histc(pp, bins=255, min=0, max=255)
+    nn_hist = torch.histc(nn, bins=255, min=0, max=255)
+
+    pp_hist_flip = torch.flipud(pp_hist)
+    nn_hist_flip = torch.flipud(nn_hist)
+
+    pp_hist_flip_cum = torch.cumsum(pp_hist_flip, dim=0)
+    nn_hist_flip_cum = torch.cumsum(nn_hist_flip, dim=0)
+
+    precision = (pp_hist_flip_cum) / (
+        pp_hist_flip_cum + nn_hist_flip_cum + 1e-4
+    )  # torch.divide(pp_hist_flip_cum,torch.sum(torch.sum(pp_hist_flip_cum, nn_hist_flip_cum), 1e-4))
+    recall = (pp_hist_flip_cum) / (gtNum + 1e-4)
+    f1 = (1 + 0.3) * precision * recall / (0.3 * precision + recall + 1e-4)
+
+    return (
+        torch.reshape(precision, (1, precision.shape[0])),
+        torch.reshape(recall, (1, recall.shape[0])),
+        torch.reshape(f1, (1, f1.shape[0])),
+    )
+
+
+def f1_mae_torch(pred, gt, valid_dataset, idx, mybins, hypar):
+
+    import time
+
+    tic = time.time()
+
+    if len(gt.shape) > 2:
+        gt = gt[:, :, 0]
+
+    pre, rec, f1 = f1score_torch(pred, gt)
+    mae = mae_torch(pred, gt)
+
+    print(valid_dataset.dataset["im_name"][idx] + ".png")
+    print("time for evaluation : ", time.time() - tic)
+
+    return (
+        pre.cpu().data.numpy(),
+        rec.cpu().data.numpy(),
+        f1.cpu().data.numpy(),
+        mae.cpu().data.numpy(),
+    )
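The two metrics in `ormbg/basics.py` can be checked by hand on a tiny example. The sketch below is a plain-Python illustration, not the repository's code: `mae` and `f_beta` are hypothetical names, the inputs are nested lists of 8-bit values, and explicit zero guards stand in for the `1e-4` terms used above. It evaluates the F-measure at a single threshold, whereas `f1score_torch` appears to cover all thresholds at once through cumulative histograms. The weight 0.3 matches the constant in `f1score_torch`.

```python
def mae(pred, gt):
    """Mean absolute error of two equally sized 8-bit maps, scaled to [0, 1]."""
    h, w = len(gt), len(gt[0])
    total = sum(abs(p - g) for pr, gr in zip(pred, gt) for p, g in zip(pr, gr))
    return total / (h * w * 255.0)


def f_beta(pred, gt, threshold, beta2=0.3):
    """F-measure at one threshold; gt pixels above 128 count as foreground."""
    tp = fp = gt_pos = 0
    for pr, gr in zip(pred, gt):
        for p, g in zip(pr, gr):
            is_fg = g > 128
            gt_pos += is_fg
            if p >= threshold:
                tp += is_fg       # predicted foreground, truly foreground
                fp += not is_fg   # predicted foreground, truly background
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / gt_pos if gt_pos else 0.0
    denom = beta2 * precision + recall
    return (1 + beta2) * precision * recall / denom if denom else 0.0


# 2x2 example: the top row is foreground, the prediction finds only one pixel.
gt = [[255, 255], [0, 0]]
pred = [[255, 0], [0, 0]]
print(mae(pred, gt))
print(f_beta(pred, gt, 128))
```

Here one of four pixels is off by 255, so the error is 255 / (4 * 255) = 0.25. Precision is 1 and recall is 0.5, which gives 1.3 * 0.5 / 0.8 = 0.8125 for the F-measure.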

ormbg/data_loader_cache.py

ADDED

|

@@ -0,0 +1,489 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## data loader

|

| 2 |

+

## Ackownledgement:

|

| 3 |

+

## We would like to thank Dr. Ibrahim Almakky (https://scholar.google.co.uk/citations?user=T9MTcK0AAAAJ&hl=en)

|

| 4 |

+

## for his helps in implementing cache machanism of our DIS dataloader.

|

| 5 |

+

from __future__ import print_function, division

|

| 6 |

+

|

| 7 |

+

import albumentations as A

|

| 8 |

+

import numpy as np

|

| 9 |

+

import random

|

| 10 |

+

from copy import deepcopy

|

| 11 |

+

import json

|

| 12 |

+

from tqdm import tqdm

|

| 13 |

+

from skimage import io

|

| 14 |

+

import os

|

| 15 |

+

from glob import glob

|

| 16 |

+

|

| 17 |

+

import torch

|

| 18 |

+

from torch.utils.data import Dataset, DataLoader

|

| 19 |

+

from torchvision import transforms

|

| 20 |

+

from torchvision.transforms.functional import normalize

|

| 21 |

+

import torch.nn.functional as F

|

| 22 |

+

|

| 23 |

+

#### --------------------- DIS dataloader cache ---------------------####

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

def get_im_gt_name_dict(datasets, flag="valid"):

|

| 27 |

+

print("------------------------------", flag, "--------------------------------")

|

| 28 |

+

name_im_gt_list = []

|

| 29 |

+

for i in range(len(datasets)):

|

| 30 |

+

print(

|

| 31 |

+

"--->>>",

|

| 32 |

+

flag,

|

| 33 |

+

" dataset ",

|

| 34 |

+

i,

|

| 35 |

+

"/",

|

| 36 |

+

len(datasets),

|

| 37 |

+

" ",

|

| 38 |

+

datasets[i]["name"],

|

| 39 |

+

"<<<---",

|

| 40 |

+

)

|

| 41 |

+

tmp_im_list, tmp_gt_list = [], []

|

| 42 |

+

im_dir = datasets[i]["im_dir"]

|

| 43 |

+

gt_dir = datasets[i]["gt_dir"]

|

| 44 |

+

tmp_im_list = glob(os.path.join(im_dir, "*" + "*.[jp][pn]g"))

|

| 45 |

+

tmp_gt_list = glob(os.path.join(gt_dir, "*" + "*.[jp][pn]g"))

|

| 46 |

+

|

| 47 |

+

print(

|

| 48 |

+

"-im-", datasets[i]["name"], datasets[i]["im_dir"], ": ", len(tmp_im_list)

|

| 49 |

+

)

|

| 50 |

+

|

| 51 |

+

print(

|

| 52 |

+

"-gt-",

|

| 53 |

+

datasets[i]["name"],

|

| 54 |

+

datasets[i]["gt_dir"],

|

| 55 |

+

": ",

|

| 56 |

+

len(tmp_gt_list),

|

| 57 |

+

)

|

| 58 |

+

|

| 59 |

+

if flag == "train": ## combine multiple training sets into one dataset

|

| 60 |

+

if len(name_im_gt_list) == 0:

|

| 61 |

+

name_im_gt_list.append(

|

| 62 |

+

{

|

| 63 |

+

"dataset_name": datasets[i]["name"],

|

| 64 |

+

"im_path": tmp_im_list,

|

| 65 |

+

"gt_path": tmp_gt_list,

|

| 66 |

+

"im_ext": datasets[i]["im_ext"],

|

| 67 |

+

"gt_ext": datasets[i]["gt_ext"],

|

| 68 |

+

"cache_dir": datasets[i]["cache_dir"],

|

| 69 |

+

}

|

| 70 |

+

)

|

| 71 |

+

else:

|

| 72 |

+

name_im_gt_list[0]["dataset_name"] = (

|

| 73 |

+

name_im_gt_list[0]["dataset_name"] + "_" + datasets[i]["name"]

|

| 74 |

+

)

|

| 75 |

+

name_im_gt_list[0]["im_path"] = (

|

| 76 |

+

name_im_gt_list[0]["im_path"] + tmp_im_list

|

| 77 |

+

)

|

| 78 |

+

name_im_gt_list[0]["gt_path"] = (

|

| 79 |

+

name_im_gt_list[0]["gt_path"] + tmp_gt_list

|

| 80 |

+

)

|

| 81 |

+

if datasets[i]["im_ext"] != ".jpg" or datasets[i]["gt_ext"] != ".png":

|

| 82 |

+

print(

|

| 83 |

+

"Error: Please make sure all your images and ground truth masks are in jpg and png format respectively !!!"

|

| 84 |

+

)

|

| 85 |

+

exit()

|

| 86 |

+

name_im_gt_list[0]["im_ext"] = ".jpg"

|

| 87 |

+

name_im_gt_list[0]["gt_ext"] = ".png"

|

| 88 |

+

name_im_gt_list[0]["cache_dir"] = (

|

| 89 |

+

os.sep.join(datasets[i]["cache_dir"].split(os.sep)[0:-1])

|

| 90 |

+

+ os.sep

|

| 91 |

+

+ name_im_gt_list[0]["dataset_name"]

|

| 92 |

+

)

|

| 93 |

+

else: ## keep different validation or inference datasets as separate ones

|

| 94 |

+

name_im_gt_list.append(

|

| 95 |

+

{

|

| 96 |

+

"dataset_name": datasets[i]["name"],

|

| 97 |

+

"im_path": tmp_im_list,

|

| 98 |

+

"gt_path": tmp_gt_list,

|

| 99 |

+

"im_ext": datasets[i]["im_ext"],

|

| 100 |

+

"gt_ext": datasets[i]["gt_ext"],

|

| 101 |

+

"cache_dir": datasets[i]["cache_dir"],

|

| 102 |

+

}

|

| 103 |

+

)

|

| 104 |

+

|

| 105 |

+

return name_im_gt_list

|

| 106 |

+

|

| 107 |

+

|
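`get_im_gt_name_dict` collects image and mask paths with the glob pattern built from `"*" + "*.[jp][pn]g"`. The sketch below illustrates what that character-class pattern accepts, using the standard-library `fnmatch` module as a stand-in for `glob`'s matching. The file names are hypothetical and exist only for this illustration.

```python
from fnmatch import fnmatchcase

# Same pattern string the loader builds with "*" + "*.[jp][pn]g".
PATTERN = "**.[jp][pn]g"

# Hypothetical file names, used only to probe the pattern.
candidates = ["a.jpg", "a.png", "a.jpeg", "a.JPG", "a.jng", "a.ppg", "a.bmp"]

# fnmatchcase is case-sensitive on every platform.
matched = [name for name in candidates if fnmatchcase(name, PATTERN)]
print(matched)
```

Under these assumptions the pattern picks up `.jpg` and `.png`, skips `.jpeg` and upper-case extensions, and would also accept unintended combinations such as `.jng` or `.ppg` if such files were present.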

| 108 |

+

def create_dataloaders(

|

| 109 |

+

name_im_gt_list,

|

| 110 |

+

cache_size=[],

|

| 111 |

+

cache_boost=True,

|

| 112 |

+

my_transforms=[],

|

| 113 |

+

batch_size=1,

|

| 114 |

+

shuffle=False,

|

| 115 |

+

):

|

| 116 |

+

## list built with flag="train" (one merged entry): yields a single dataloader for training

|

| 117 |

+

## list built with flag="valid": yields one dataloader per validation or testing dataset

|

| 118 |

+

|

| 119 |

+

gos_dataloaders = []

|

| 120 |

+

gos_datasets = []

|

| 121 |

+

|

| 122 |

+

if len(name_im_gt_list) == 0:

|

| 123 |

+

return gos_dataloaders, gos_datasets

|

| 124 |

+

|

| 125 |

+

num_workers_ = 1

|

| 126 |

+

if batch_size > 1:

|

| 127 |

+

num_workers_ = 2

|

| 128 |

+

if batch_size > 4:

|

| 129 |

+

num_workers_ = 4

|

| 130 |

+

if batch_size > 8:

|

| 131 |

+

num_workers_ = 8

|

| 132 |

+

|

| 133 |

+

for i in range(0, len(name_im_gt_list)):

|

| 134 |

+

gos_dataset = GOSDatasetCache(

|

| 135 |

+

[name_im_gt_list[i]],

|

| 136 |

+

cache_size=cache_size,

|

| 137 |

+

cache_path=name_im_gt_list[i]["cache_dir"],

|

| 138 |

+

cache_boost=cache_boost,

|

| 139 |

+

transform=transforms.Compose(my_transforms),

|

| 140 |

+

)

|

| 141 |

+

gos_dataloaders.append(

|

| 142 |

+

DataLoader(

|

| 143 |

+

gos_dataset,

|

| 144 |

+

batch_size=batch_size,

|

| 145 |

+

shuffle=shuffle,

|

| 146 |

+

num_workers=num_workers_,

|

| 147 |

+

)

|

| 148 |

+

)

|

| 149 |

+

gos_datasets.append(gos_dataset)

|

| 150 |

+

|

| 151 |

+

return gos_dataloaders, gos_datasets

|

| 152 |

+

|

| 153 |

+

|
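The worker count in `create_dataloaders` is chosen by a chain of independent `if` statements, so the last threshold that the batch size exceeds wins. A minimal sketch of that selection, pulled out into a hypothetical helper (`pick_num_workers` is not part of the original file):

```python
def pick_num_workers(batch_size: int) -> int:
    """Hypothetical helper mirroring the thresholds used in create_dataloaders."""
    num_workers = 1
    if batch_size > 1:
        num_workers = 2
    if batch_size > 4:
        num_workers = 4
    if batch_size > 8:
        num_workers = 8
    return num_workers


# Show the mapping for a few batch sizes around each threshold.
for bs in (1, 2, 4, 5, 8, 9, 32):
    print(bs, pick_num_workers(bs))
```

The mapping is: batch size 1 uses 1 worker, 2 to 4 use 2 workers, 5 to 8 use 4 workers, and anything larger uses 8.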

| 154 |

+

def im_reader(im_path):

|

| 155 |

+

return io.imread(im_path)

|

| 156 |

+

|

| 157 |

+

|

| 158 |

+

def im_preprocess(im, size):

|

| 159 |

+

if len(im.shape) < 3:

|

| 160 |

+

im = im[:, :, np.newaxis]

|

| 161 |

+

if im.shape[2] == 1:

|

| 162 |

+

im = np.repeat(im, 3, axis=2)

|

| 163 |

+

im_tensor = torch.tensor(im.copy(), dtype=torch.float32)

|

| 164 |

+

im_tensor = torch.transpose(torch.transpose(im_tensor, 1, 2), 0, 1)

|

| 165 |

+

if len(size) < 2:

|

| 166 |

+

return im_tensor, im.shape[0:2]

|

| 167 |

+

else:

|

| 168 |

+

im_tensor = torch.unsqueeze(im_tensor, 0)

|

| 169 |

+

im_tensor = F.interpolate(im_tensor, size, mode="bilinear")

|

| 170 |

+

im_tensor = torch.squeeze(im_tensor, 0)

|

| 171 |

+

|

| 172 |

+

return im_tensor.type(torch.uint8), im.shape[0:2]

|

| 173 |

+

|

| 174 |

+

|

| 175 |

+

def gt_preprocess(gt, size):

|

| 176 |

+

if len(gt.shape) > 2:

|

| 177 |

+

gt = gt[:, :, 0]

|

| 178 |

+

|

| 179 |

+

gt_tensor = torch.unsqueeze(torch.tensor(gt, dtype=torch.uint8), 0)

|

| 180 |

+

|

| 181 |

+

if len(size) < 2:

|

| 182 |

+

return gt_tensor.type(torch.uint8), gt.shape[0:2]

|

| 183 |

+

else:

|

| 184 |

+

gt_tensor = torch.unsqueeze(gt_tensor.to(torch.float32), 0)

|

| 185 |

+

gt_tensor = F.interpolate(gt_tensor, size, mode="bilinear")

|

| 186 |

+

gt_tensor = torch.squeeze(gt_tensor, 0)

|

| 187 |

+

|

| 188 |

+

return gt_tensor.type(torch.uint8), gt.shape[0:2]

|

| 189 |

+

# return gt_tensor, gt.shape[0:2]

|

| 190 |

+

|

| 191 |

+

|

| 192 |

+

class GOSGridDropout(object):

|

| 193 |

+

def __init__(

|

| 194 |

+

self,

|

| 195 |

+

ratio=0.5,

|

| 196 |

+

unit_size_min=100,

|

| 197 |

+

unit_size_max=100,

|

| 198 |

+

holes_number_x=None,

|

| 199 |

+

holes_number_y=None,

|

| 200 |

+

shift_x=0,

|

| 201 |

+

shift_y=0,

|

| 202 |

+

random_offset=True,

|

| 203 |

+

fill_value=0,

|

| 204 |

+

mask_fill_value=None,

|

| 205 |

+

always_apply=None,

|

| 206 |

+

p=1.0,

|

| 207 |

+

):

|

| 208 |

+

self.transform = A.GridDropout(

|

| 209 |

+

ratio=ratio,

|

| 210 |

+

unit_size_min=unit_size_min,

|

| 211 |

+

unit_size_max=unit_size_max,

|

| 212 |

+

holes_number_x=holes_number_x,

|

| 213 |

+

holes_number_y=holes_number_y,

|

| 214 |

+

shift_x=shift_x,

|

| 215 |

+

shift_y=shift_y,

|

| 216 |

+

random_offset=random_offset,

|

| 217 |

+

fill_value=fill_value,

|

| 218 |

+

mask_fill_value=mask_fill_value,

|

| 219 |

+

always_apply=always_apply,

|

| 220 |

+

p=p,

|

| 221 |

+

)

|

| 222 |

+

|

| 223 |

+

def __call__(self, sample):

|

| 224 |

+

imidx, image, label, shape = (

|

| 225 |

+

sample["imidx"],

|

| 226 |

+

sample["image"],

|

| 227 |

+

sample["label"],

|

| 228 |

+

sample["shape"],

|

| 229 |

+

)

|

| 230 |

+

|

| 231 |

+

# Convert the torch tensors to numpy arrays

|

| 232 |

+

image_np = image.permute(1, 2, 0).numpy()

|

| 233 |

+

|

| 234 |

+

augmented = self.transform(image=image_np)

|

| 235 |

+

|

| 236 |

+

# Convert the numpy arrays back to torch tensors

|

| 237 |

+

image = torch.tensor(augmented["image"]).permute(2, 0, 1)

|

| 238 |

+

|

| 239 |

+

return {"imidx": imidx, "image": image, "label": label, "shape": shape}

|

| 240 |

+

|

| 241 |

+

|

| 242 |

+

class GOSRandomHFlip(object):

|

| 243 |

+

def __init__(self, prob=0.5):

|

| 244 |

+

self.prob = prob

|

| 245 |

+

|

| 246 |

+

def __call__(self, sample):

|

| 247 |

+

imidx, image, label, shape = (

|

| 248 |

+

sample["imidx"],

|

| 249 |

+

sample["image"],

|

| 250 |

+

sample["label"],

|

| 251 |

+

sample["shape"],

|

| 252 |

+

)

|

| 253 |

+

|

| 254 |

+

# random horizontal flip: applied when random.random() >= prob, i.e. with probability 1 - prob

|

| 255 |

+

if random.random() >= self.prob:

|

| 256 |

+

image = torch.flip(image, dims=[2])

|

| 257 |

+

label = torch.flip(label, dims=[2])

|

| 258 |

+

|

| 259 |

+

return {"imidx": imidx, "image": image, "label": label, "shape": shape}

|

| 260 |

+

|

| 261 |

+

|
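`GOSRandomHFlip` flips when `random.random() >= self.prob`, so the flip happens with probability `1 - prob` rather than `prob`. With the default `prob=0.5` the two coincide, but for other values they do not. The sketch below estimates the flip rate empirically; `flip_fraction` is a hypothetical helper written for this illustration and uses only the standard library.

```python
import random


def flip_fraction(prob: float, trials: int = 20000, seed: int = 0) -> float:
    """Estimate how often the condition `random() >= prob` is true."""
    rng = random.Random(seed)  # seeded generator for repeatable runs
    flips = sum(1 for _ in range(trials) if rng.random() >= prob)
    return flips / trials


print(flip_fraction(0.5))  # close to 0.5
print(flip_fraction(0.9))  # close to 0.1, not 0.9
```

If `prob` is meant to be the probability of flipping, the comparison would need to be `random.random() < self.prob`; whether that is the intended behaviour is an assumption the source does not settle.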

| 262 |

+

class GOSDatasetCache(Dataset):

|

| 263 |

+

|

| 264 |

+

def __init__(

|

| 265 |

+

self,

|

| 266 |

+

name_im_gt_list,

|

| 267 |

+

cache_size=[],

|

| 268 |

+

cache_path="./cache",

|

| 269 |

+

cache_file_name="dataset.json",

|

| 270 |

+

cache_boost=False,

|

| 271 |

+

transform=None,

|

| 272 |

+

):

|

| 273 |

+

|

| 274 |

+

self.cache_size = cache_size

|

| 275 |

+

self.cache_path = cache_path

|

| 276 |

+

self.cache_file_name = cache_file_name

|

| 277 |

+

self.cache_boost_name = ""

|

| 278 |

+

|

| 279 |

+

self.cache_boost = cache_boost

|

| 280 |

+

# self.ims_npy = None

|

| 281 |

+

# self.gts_npy = None

|

| 282 |

+

|

| 283 |

+

## cache all the images and ground truth into a single pytorch tensor

|

| 284 |

+

self.ims_pt = None

|

| 285 |

+

self.gts_pt = None

|

| 286 |

+

|

| 287 |

+

## we will cache the npy as well regardless of the cache_boost

|

| 288 |

+

# if(self.cache_boost):

|

| 289 |

+

self.cache_boost_name = cache_file_name.split(".json")[0]

|

| 290 |

+

|

| 291 |

+

self.transform = transform

|

| 292 |

+

|

| 293 |

+

self.dataset = {}

|

| 294 |

+

|

| 295 |

+

## combine different datasets into one

|

| 296 |

+

dataset_names = []

|

| 297 |

+

dt_name_list = [] # dataset name per image

|

| 298 |

+

im_name_list = [] # image name

|

| 299 |

+

im_path_list = [] # im path

|

| 300 |

+

gt_path_list = [] # gt path

|

| 301 |

+

im_ext_list = [] # im ext

|

| 302 |

+

gt_ext_list = [] # gt ext

|

| 303 |

+

for i in range(0, len(name_im_gt_list)):

|

| 304 |

+

dataset_names.append(name_im_gt_list[i]["dataset_name"])

|

| 305 |

+

# dataset name repeated based on the number of images in this dataset

|

| 306 |

+

dt_name_list.extend(

|

| 307 |

+

[

|

| 308 |

+

name_im_gt_list[i]["dataset_name"]

|

| 309 |

+

for x in name_im_gt_list[i]["im_path"]

|

| 310 |

+

]

|

| 311 |

+

)

|

| 312 |

+

im_name_list.extend(

|

| 313 |

+

[

|

| 314 |

+

x.split(os.sep)[-1].split(name_im_gt_list[i]["im_ext"])[0]

|

| 315 |

+

for x in name_im_gt_list[i]["im_path"]

|

| 316 |

+

]

|

| 317 |

+

)

|

| 318 |

+

im_path_list.extend(name_im_gt_list[i]["im_path"])

|

| 319 |

+

gt_path_list.extend(name_im_gt_list[i]["gt_path"])

|

| 320 |

+

im_ext_list.extend(

|

| 321 |

+

[name_im_gt_list[i]["im_ext"] for x in name_im_gt_list[i]["im_path"]]

|

| 322 |

+

)

|

| 323 |

+

gt_ext_list.extend(

|

| 324 |

+

[name_im_gt_list[i]["gt_ext"] for x in name_im_gt_list[i]["gt_path"]]

|

| 325 |

+

)

|

| 326 |

+

|

| 327 |

+

self.dataset["data_name"] = dt_name_list

|

| 328 |

+

self.dataset["im_name"] = im_name_list

|

| 329 |

+

self.dataset["im_path"] = im_path_list

|

| 330 |

+

self.dataset["ori_im_path"] = deepcopy(im_path_list)

|

| 331 |

+

self.dataset["gt_path"] = gt_path_list

|

| 332 |

+

self.dataset["ori_gt_path"] = deepcopy(gt_path_list)

|

| 333 |

+

self.dataset["im_shp"] = []

|

| 334 |

+

self.dataset["gt_shp"] = []

|

| 335 |

+

self.dataset["im_ext"] = im_ext_list

|

| 336 |

+

self.dataset["gt_ext"] = gt_ext_list

|

| 337 |

+

|

| 338 |

+

self.dataset["ims_pt_dir"] = ""

|

| 339 |

+

self.dataset["gts_pt_dir"] = ""

|

| 340 |

+

|

| 341 |

+

self.dataset = self.manage_cache(dataset_names)

|

| 342 |

+

|