Spaces:

Running

on

Zero

Running

on

Zero

Feature(MInference): build demo

Browse files- .gitignore +415 -0

- LICENSE +21 -0

- README.md +130 -1

- app.py +145 -4

- images/MInference1_onepage.png +0 -0

- images/MInference_logo.png +0 -0

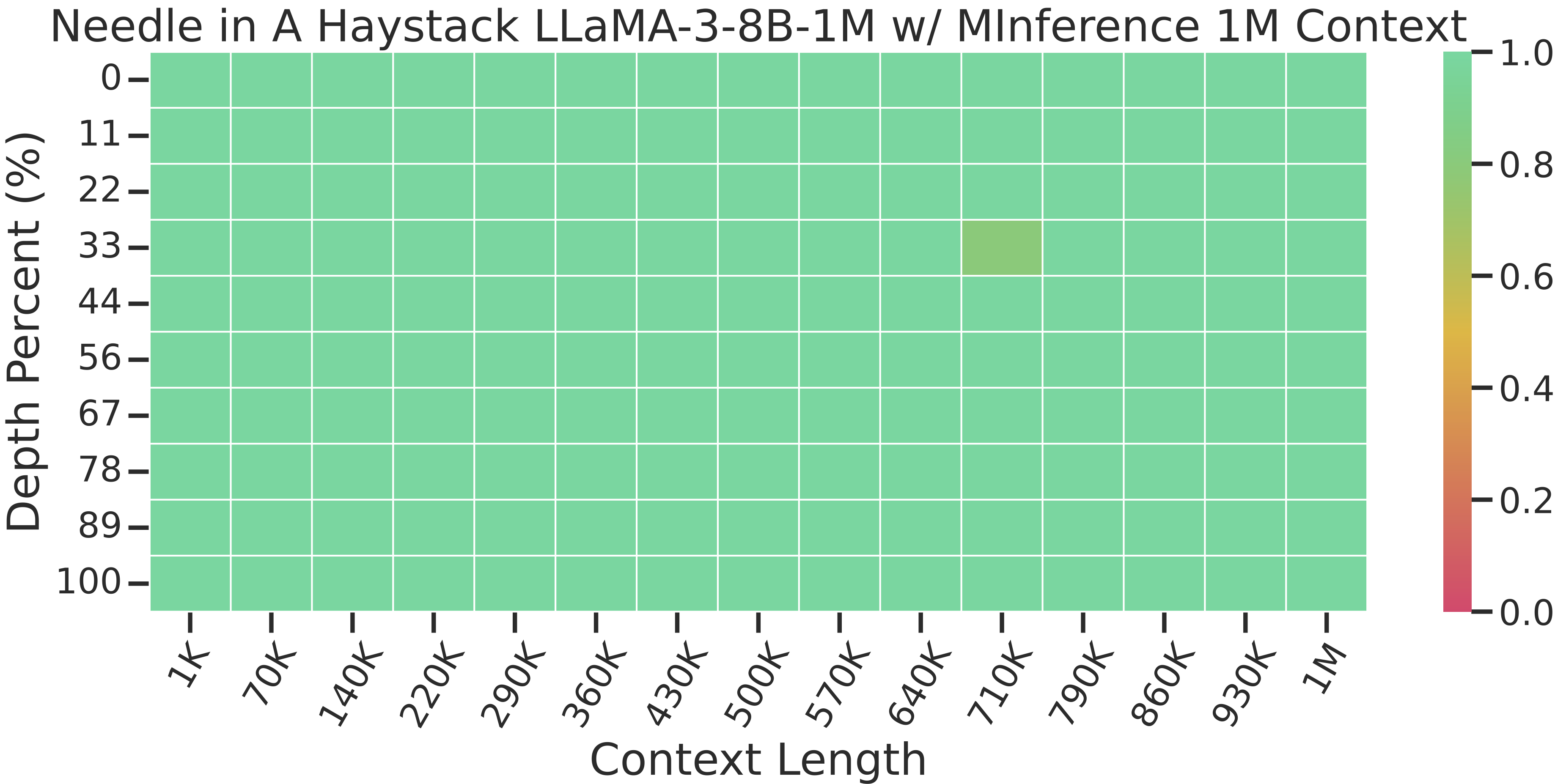

- images/benchmarks/needle_viz_LLaMA-3-8B-1M_ours_1K_1000K.png +0 -0

- images/benchmarks/ppl-LLaMA-3-262k.png +0 -0

- minference/__init__.py +27 -0

- minference/configs/Llama_3_8B_Instruct_262k_kv_out_v32_fit_o_best_pattern.json +1 -0

- minference/configs/Phi_3_mini_128k_instruct_kv_out_v32_fit_o_best_pattern.json +1 -0

- minference/configs/Yi_9B_200k_kv_out_v32_fit_o_best_pattern.json +1 -0

- minference/configs/model2path.py +17 -0

- minference/minference_configuration.py +49 -0

- minference/models_patch.py +100 -0

- minference/modules/inf_llm.py +1296 -0

- minference/modules/minference_forward.py +855 -0

- minference/modules/snap_kv.py +422 -0

- minference/ops/block_sparse_flash_attention.py +464 -0

- minference/ops/pit_sparse_flash_attention.py +740 -0

- minference/ops/pit_sparse_flash_attention_v2.py +735 -0

- minference/ops/streaming_kernel.py +763 -0

- minference/patch.py +1279 -0

- minference/version.py +14 -0

- requirements.txt +5 -0

.gitignore

ADDED

|

@@ -0,0 +1,415 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## Ignore Visual Studio temporary files, build results, and

|

| 2 |

+

## files generated by popular Visual Studio add-ons.

|

| 3 |

+

##

|

| 4 |

+

## Get latest from https://github.com/github/gitignore/blob/main/VisualStudio.gitignore

|

| 5 |

+

|

| 6 |

+

# User-specific files

|

| 7 |

+

*.rsuser

|

| 8 |

+

*.suo

|

| 9 |

+

*.user

|

| 10 |

+

*.userosscache

|

| 11 |

+

*.sln.docstates

|

| 12 |

+

|

| 13 |

+

# User-specific files (MonoDevelop/Xamarin Studio)

|

| 14 |

+

*.userprefs

|

| 15 |

+

|

| 16 |

+

# Mono auto generated files

|

| 17 |

+

mono_crash.*

|

| 18 |

+

|

| 19 |

+

# Build results

|

| 20 |

+

[Dd]ebug/

|

| 21 |

+

[Dd]ebugPublic/

|

| 22 |

+

[Rr]elease/

|

| 23 |

+

[Rr]eleases/

|

| 24 |

+

x64/

|

| 25 |

+

x86/

|

| 26 |

+

[Ww][Ii][Nn]32/

|

| 27 |

+

[Aa][Rr][Mm]/

|

| 28 |

+

[Aa][Rr][Mm]64/

|

| 29 |

+

bld/

|

| 30 |

+

[Bb]in/

|

| 31 |

+

[Oo]bj/

|

| 32 |

+

[Ll]og/

|

| 33 |

+

[Ll]ogs/

|

| 34 |

+

|

| 35 |

+

# Visual Studio 2015/2017 cache/options directory

|

| 36 |

+

.vs/

|

| 37 |

+

# Uncomment if you have tasks that create the project's static files in wwwroot

|

| 38 |

+

#wwwroot/

|

| 39 |

+

|

| 40 |

+

# Visual Studio 2017 auto generated files

|

| 41 |

+

Generated\ Files/

|

| 42 |

+

|

| 43 |

+

# MSTest test Results

|

| 44 |

+

[Tt]est[Rr]esult*/

|

| 45 |

+

[Bb]uild[Ll]og.*

|

| 46 |

+

|

| 47 |

+

# NUnit

|

| 48 |

+

*.VisualState.xml

|

| 49 |

+

TestResult.xml

|

| 50 |

+

nunit-*.xml

|

| 51 |

+

|

| 52 |

+

# Build Results of an ATL Project

|

| 53 |

+

[Dd]ebugPS/

|

| 54 |

+

[Rr]eleasePS/

|

| 55 |

+

dlldata.c

|

| 56 |

+

|

| 57 |

+

# Benchmark Results

|

| 58 |

+

BenchmarkDotNet.Artifacts/

|

| 59 |

+

|

| 60 |

+

# .NET Core

|

| 61 |

+

project.lock.json

|

| 62 |

+

project.fragment.lock.json

|

| 63 |

+

artifacts/

|

| 64 |

+

|

| 65 |

+

# ASP.NET Scaffolding

|

| 66 |

+

ScaffoldingReadMe.txt

|

| 67 |

+

|

| 68 |

+

# StyleCop

|

| 69 |

+

StyleCopReport.xml

|

| 70 |

+

|

| 71 |

+

# Files built by Visual Studio

|

| 72 |

+

*_i.c

|

| 73 |

+

*_p.c

|

| 74 |

+

*_h.h

|

| 75 |

+

*.ilk

|

| 76 |

+

*.meta

|

| 77 |

+

*.obj

|

| 78 |

+

*.iobj

|

| 79 |

+

*.pch

|

| 80 |

+

*.pdb

|

| 81 |

+

*.ipdb

|

| 82 |

+

*.pgc

|

| 83 |

+

*.pgd

|

| 84 |

+

*.rsp

|

| 85 |

+

*.sbr

|

| 86 |

+

*.tlb

|

| 87 |

+

*.tli

|

| 88 |

+

*.tlh

|

| 89 |

+

*.tmp

|

| 90 |

+

*.tmp_proj

|

| 91 |

+

*_wpftmp.csproj

|

| 92 |

+

*.log

|

| 93 |

+

*.tlog

|

| 94 |

+

*.vspscc

|

| 95 |

+

*.vssscc

|

| 96 |

+

.builds

|

| 97 |

+

*.pidb

|

| 98 |

+

*.svclog

|

| 99 |

+

*.scc

|

| 100 |

+

|

| 101 |

+

# Chutzpah Test files

|

| 102 |

+

_Chutzpah*

|

| 103 |

+

|

| 104 |

+

# Visual C++ cache files

|

| 105 |

+

ipch/

|

| 106 |

+

*.aps

|

| 107 |

+

*.ncb

|

| 108 |

+

*.opendb

|

| 109 |

+

*.opensdf

|

| 110 |

+

*.sdf

|

| 111 |

+

*.cachefile

|

| 112 |

+

*.VC.db

|

| 113 |

+

*.VC.VC.opendb

|

| 114 |

+

|

| 115 |

+

# Visual Studio profiler

|

| 116 |

+

*.psess

|

| 117 |

+

*.vsp

|

| 118 |

+

*.vspx

|

| 119 |

+

*.sap

|

| 120 |

+

|

| 121 |

+

# Visual Studio Trace Files

|

| 122 |

+

*.e2e

|

| 123 |

+

|

| 124 |

+

# TFS 2012 Local Workspace

|

| 125 |

+

$tf/

|

| 126 |

+

|

| 127 |

+

# Guidance Automation Toolkit

|

| 128 |

+

*.gpState

|

| 129 |

+

|

| 130 |

+

# ReSharper is a .NET coding add-in

|

| 131 |

+

_ReSharper*/

|

| 132 |

+

*.[Rr]e[Ss]harper

|

| 133 |

+

*.DotSettings.user

|

| 134 |

+

|

| 135 |

+

# TeamCity is a build add-in

|

| 136 |

+

_TeamCity*

|

| 137 |

+

|

| 138 |

+

# DotCover is a Code Coverage Tool

|

| 139 |

+

*.dotCover

|

| 140 |

+

|

| 141 |

+

# AxoCover is a Code Coverage Tool

|

| 142 |

+

.axoCover/*

|

| 143 |

+

!.axoCover/settings.json

|

| 144 |

+

|

| 145 |

+

# Coverlet is a free, cross platform Code Coverage Tool

|

| 146 |

+

coverage*.json

|

| 147 |

+

coverage*.xml

|

| 148 |

+

coverage*.info

|

| 149 |

+

|

| 150 |

+

# Visual Studio code coverage results

|

| 151 |

+

*.coverage

|

| 152 |

+

*.coveragexml

|

| 153 |

+

|

| 154 |

+

# NCrunch

|

| 155 |

+

_NCrunch_*

|

| 156 |

+

.*crunch*.local.xml

|

| 157 |

+

nCrunchTemp_*

|

| 158 |

+

|

| 159 |

+

# MightyMoose

|

| 160 |

+

*.mm.*

|

| 161 |

+

AutoTest.Net/

|

| 162 |

+

|

| 163 |

+

# Web workbench (sass)

|

| 164 |

+

.sass-cache/

|

| 165 |

+

|

| 166 |

+

# Installshield output folder

|

| 167 |

+

[Ee]xpress/

|

| 168 |

+

|

| 169 |

+

# DocProject is a documentation generator add-in

|

| 170 |

+

DocProject/buildhelp/

|

| 171 |

+

DocProject/Help/*.HxT

|

| 172 |

+

DocProject/Help/*.HxC

|

| 173 |

+

DocProject/Help/*.hhc

|

| 174 |

+

DocProject/Help/*.hhk

|

| 175 |

+

DocProject/Help/*.hhp

|

| 176 |

+

DocProject/Help/Html2

|

| 177 |

+

DocProject/Help/html

|

| 178 |

+

|

| 179 |

+

# Click-Once directory

|

| 180 |

+

publish/

|

| 181 |

+

|

| 182 |

+

# Publish Web Output

|

| 183 |

+

*.[Pp]ublish.xml

|

| 184 |

+

*.azurePubxml

|

| 185 |

+

# Note: Comment the next line if you want to checkin your web deploy settings,

|

| 186 |

+

# but database connection strings (with potential passwords) will be unencrypted

|

| 187 |

+

*.pubxml

|

| 188 |

+

*.publishproj

|

| 189 |

+

|

| 190 |

+

# Microsoft Azure Web App publish settings. Comment the next line if you want to

|

| 191 |

+

# checkin your Azure Web App publish settings, but sensitive information contained

|

| 192 |

+

# in these scripts will be unencrypted

|

| 193 |

+

PublishScripts/

|

| 194 |

+

|

| 195 |

+

# NuGet Packages

|

| 196 |

+

*.nupkg

|

| 197 |

+

# NuGet Symbol Packages

|

| 198 |

+

*.snupkg

|

| 199 |

+

# The packages folder can be ignored because of Package Restore

|

| 200 |

+

**/[Pp]ackages/*

|

| 201 |

+

# except build/, which is used as an MSBuild target.

|

| 202 |

+

!**/[Pp]ackages/build/

|

| 203 |

+

# Uncomment if necessary however generally it will be regenerated when needed

|

| 204 |

+

#!**/[Pp]ackages/repositories.config

|

| 205 |

+

# NuGet v3's project.json files produces more ignorable files

|

| 206 |

+

*.nuget.props

|

| 207 |

+

*.nuget.targets

|

| 208 |

+

|

| 209 |

+

# Microsoft Azure Build Output

|

| 210 |

+

csx/

|

| 211 |

+

*.build.csdef

|

| 212 |

+

|

| 213 |

+

# Microsoft Azure Emulator

|

| 214 |

+

ecf/

|

| 215 |

+

rcf/

|

| 216 |

+

|

| 217 |

+

# Windows Store app package directories and files

|

| 218 |

+

AppPackages/

|

| 219 |

+

BundleArtifacts/

|

| 220 |

+

Package.StoreAssociation.xml

|

| 221 |

+

_pkginfo.txt

|

| 222 |

+

*.appx

|

| 223 |

+

*.appxbundle

|

| 224 |

+

*.appxupload

|

| 225 |

+

|

| 226 |

+

# Visual Studio cache files

|

| 227 |

+

# files ending in .cache can be ignored

|

| 228 |

+

*.[Cc]ache

|

| 229 |

+

# but keep track of directories ending in .cache

|

| 230 |

+

!?*.[Cc]ache/

|

| 231 |

+

|

| 232 |

+

# Others

|

| 233 |

+

ClientBin/

|

| 234 |

+

~$*

|

| 235 |

+

*~

|

| 236 |

+

*.dbmdl

|

| 237 |

+

*.dbproj.schemaview

|

| 238 |

+

*.jfm

|

| 239 |

+

*.pfx

|

| 240 |

+

*.publishsettings

|

| 241 |

+

orleans.codegen.cs

|

| 242 |

+

|

| 243 |

+

# Including strong name files can present a security risk

|

| 244 |

+

# (https://github.com/github/gitignore/pull/2483#issue-259490424)

|

| 245 |

+

#*.snk

|

| 246 |

+

|

| 247 |

+

# Since there are multiple workflows, uncomment next line to ignore bower_components

|

| 248 |

+

# (https://github.com/github/gitignore/pull/1529#issuecomment-104372622)

|

| 249 |

+

#bower_components/

|

| 250 |

+

|

| 251 |

+

# RIA/Silverlight projects

|

| 252 |

+

Generated_Code/

|

| 253 |

+

|

| 254 |

+

# Backup & report files from converting an old project file

|

| 255 |

+

# to a newer Visual Studio version. Backup files are not needed,

|

| 256 |

+

# because we have git ;-)

|

| 257 |

+

_UpgradeReport_Files/

|

| 258 |

+

Backup*/

|

| 259 |

+

UpgradeLog*.XML

|

| 260 |

+

UpgradeLog*.htm

|

| 261 |

+

ServiceFabricBackup/

|

| 262 |

+

*.rptproj.bak

|

| 263 |

+

|

| 264 |

+

# SQL Server files

|

| 265 |

+

*.mdf

|

| 266 |

+

*.ldf

|

| 267 |

+

*.ndf

|

| 268 |

+

|

| 269 |

+

# Business Intelligence projects

|

| 270 |

+

*.rdl.data

|

| 271 |

+

*.bim.layout

|

| 272 |

+

*.bim_*.settings

|

| 273 |

+

*.rptproj.rsuser

|

| 274 |

+

*- [Bb]ackup.rdl

|

| 275 |

+

*- [Bb]ackup ([0-9]).rdl

|

| 276 |

+

*- [Bb]ackup ([0-9][0-9]).rdl

|

| 277 |

+

|

| 278 |

+

# Microsoft Fakes

|

| 279 |

+

FakesAssemblies/

|

| 280 |

+

|

| 281 |

+

# GhostDoc plugin setting file

|

| 282 |

+

*.GhostDoc.xml

|

| 283 |

+

|

| 284 |

+

# Node.js Tools for Visual Studio

|

| 285 |

+

.ntvs_analysis.dat

|

| 286 |

+

node_modules/

|

| 287 |

+

|

| 288 |

+

# Visual Studio 6 build log

|

| 289 |

+

*.plg

|

| 290 |

+

|

| 291 |

+

# Visual Studio 6 workspace options file

|

| 292 |

+

*.opt

|

| 293 |

+

|

| 294 |

+

# Visual Studio 6 auto-generated workspace file (contains which files were open etc.)

|

| 295 |

+

*.vbw

|

| 296 |

+

|

| 297 |

+

# Visual Studio 6 auto-generated project file (contains which files were open etc.)

|

| 298 |

+

*.vbp

|

| 299 |

+

|

| 300 |

+

# Visual Studio 6 workspace and project file (working project files containing files to include in project)

|

| 301 |

+

*.dsw

|

| 302 |

+

*.dsp

|

| 303 |

+

|

| 304 |

+

# Visual Studio 6 technical files

|

| 305 |

+

*.ncb

|

| 306 |

+

*.aps

|

| 307 |

+

|

| 308 |

+

# Visual Studio LightSwitch build output

|

| 309 |

+

**/*.HTMLClient/GeneratedArtifacts

|

| 310 |

+

**/*.DesktopClient/GeneratedArtifacts

|

| 311 |

+

**/*.DesktopClient/ModelManifest.xml

|

| 312 |

+

**/*.Server/GeneratedArtifacts

|

| 313 |

+

**/*.Server/ModelManifest.xml

|

| 314 |

+

_Pvt_Extensions

|

| 315 |

+

|

| 316 |

+

# Paket dependency manager

|

| 317 |

+

.paket/paket.exe

|

| 318 |

+

paket-files/

|

| 319 |

+

|

| 320 |

+

# FAKE - F# Make

|

| 321 |

+

.fake/

|

| 322 |

+

|

| 323 |

+

# CodeRush personal settings

|

| 324 |

+

.cr/personal

|

| 325 |

+

|

| 326 |

+

# Python Tools for Visual Studio (PTVS)

|

| 327 |

+

__pycache__/

|

| 328 |

+

*.pyc

|

| 329 |

+

|

| 330 |

+

# Cake - Uncomment if you are using it

|

| 331 |

+

# tools/**

|

| 332 |

+

# !tools/packages.config

|

| 333 |

+

|

| 334 |

+

# Tabs Studio

|

| 335 |

+

*.tss

|

| 336 |

+

|

| 337 |

+

# Telerik's JustMock configuration file

|

| 338 |

+

*.jmconfig

|

| 339 |

+

|

| 340 |

+

# BizTalk build output

|

| 341 |

+

*.btp.cs

|

| 342 |

+

*.btm.cs

|

| 343 |

+

*.odx.cs

|

| 344 |

+

*.xsd.cs

|

| 345 |

+

|

| 346 |

+

# OpenCover UI analysis results

|

| 347 |

+

OpenCover/

|

| 348 |

+

|

| 349 |

+

# Azure Stream Analytics local run output

|

| 350 |

+

ASALocalRun/

|

| 351 |

+

|

| 352 |

+

# MSBuild Binary and Structured Log

|

| 353 |

+

*.binlog

|

| 354 |

+

|

| 355 |

+

# NVidia Nsight GPU debugger configuration file

|

| 356 |

+

*.nvuser

|

| 357 |

+

|

| 358 |

+

# MFractors (Xamarin productivity tool) working folder

|

| 359 |

+

.mfractor/

|

| 360 |

+

|

| 361 |

+

# Local History for Visual Studio

|

| 362 |

+

.localhistory/

|

| 363 |

+

|

| 364 |

+

# Visual Studio History (VSHistory) files

|

| 365 |

+

.vshistory/

|

| 366 |

+

|

| 367 |

+

# BeatPulse healthcheck temp database

|

| 368 |

+

healthchecksdb

|

| 369 |

+

|

| 370 |

+

# Backup folder for Package Reference Convert tool in Visual Studio 2017

|

| 371 |

+

MigrationBackup/

|

| 372 |

+

|

| 373 |

+

# Ionide (cross platform F# VS Code tools) working folder

|

| 374 |

+

.ionide/

|

| 375 |

+

|

| 376 |

+

# Fody - auto-generated XML schema

|

| 377 |

+

FodyWeavers.xsd

|

| 378 |

+

|

| 379 |

+

# VS Code files for those working on multiple tools

|

| 380 |

+

.vscode/*

|

| 381 |

+

!.vscode/settings.json

|

| 382 |

+

!.vscode/tasks.json

|

| 383 |

+

!.vscode/launch.json

|

| 384 |

+

!.vscode/extensions.json

|

| 385 |

+

*.code-workspace

|

| 386 |

+

|

| 387 |

+

# Local History for Visual Studio Code

|

| 388 |

+

.history/

|

| 389 |

+

|

| 390 |

+

# Windows Installer files from build outputs

|

| 391 |

+

*.cab

|

| 392 |

+

*.msi

|

| 393 |

+

*.msix

|

| 394 |

+

*.msm

|

| 395 |

+

*.msp

|

| 396 |

+

|

| 397 |

+

# JetBrains Rider

|

| 398 |

+

*.sln.iml

|

| 399 |

+

|

| 400 |

+

# Experiments

|

| 401 |

+

data

|

| 402 |

+

!experiments/ruler/data

|

| 403 |

+

needle

|

| 404 |

+

results

|

| 405 |

+

*.json

|

| 406 |

+

*.jsonl

|

| 407 |

+

.vscode/

|

| 408 |

+

*.pt

|

| 409 |

+

*.pkl

|

| 410 |

+

!minference/configs/*

|

| 411 |

+

|

| 412 |

+

__pycache__

|

| 413 |

+

build/

|

| 414 |

+

*.egg-info/

|

| 415 |

+

*.so

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) Microsoft Corporation.

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE

|

README.md

CHANGED

|

@@ -10,4 +10,133 @@ pinned: false

|

|

| 10 |

license: mit

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 10 |

license: mit

|

| 11 |

---

|

| 12 |

|

| 13 |

+

<div style="display: flex; align-items: center;">

|

| 14 |

+

<div style="width: 100px; margin-right: 10px; height:auto;" align="left">

|

| 15 |

+

<img src="images/MInference_logo.png" alt="MInference" width="100" align="left">

|

| 16 |

+

</div>

|

| 17 |

+

<div style="flex-grow: 1;" align="center">

|

| 18 |

+

<h2 align="center">MInference: Million-Tokens Prompt Inference for LLMs</h2>

|

| 19 |

+

</div>

|

| 20 |

+

</div>

|

| 21 |

+

|

| 22 |

+

<p align="center">

|

| 23 |

+

| <a href="https://llmlingua.com/"><b>Project Page</b></a> |

|

| 24 |

+

<a href="https://arxiv.org/abs/2406."><b>Paper</b></a> |

|

| 25 |

+

<a href="https://huggingface.co/spaces/microsoft/MInference"><b>Demo</b></a> |

|

| 26 |

+

</p>

|

| 27 |

+

|

| 28 |

+

https://github.com/microsoft/MInference/assets/30883354/52613efc-738f-4081-8367-7123c81d6b19

|

| 29 |

+

|

| 30 |

+

## TL;DR

|

| 31 |

+

|

| 32 |

+

**MInference 1.0** leverages the dynamic sparse nature of LLMs' attention, which exhibits some static patterns, to speed up the pre-filling for long-context LLMs. It first determines offline which sparse pattern each head belongs to, then approximates the sparse index online and dynamically computes attention with the optimal custom kernels. This approach achieves up to a **10x speedup** for pre-filling on an A100 while maintaining accuracy.

|

| 33 |

+

|

| 34 |

+

- [MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention](https://arxiv.org/abs/2406.) (Under Review)<br>

|

| 35 |

+

_Huiqiang Jiang†, Yucheng Li†, Chengruidong Zhang†, Qianhui Wu, Xufang Luo, Surin Ahn, Zhenhua Han, Amir H. Abdi, Dongsheng Li, Chin-Yew Lin, Yuqing Yang and Lili Qiu_

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

## 🎥 Overview

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

## 🎯 Quick Start

|

| 43 |

+

|

| 44 |

+

### Requirements

|

| 45 |

+

|

| 46 |

+

- Torch

|

| 47 |

+

- FlashAttention-2

|

| 48 |

+

- Triton == 2.1.0

|

| 49 |

+

|

| 50 |

+

To get started with MInference, simply install it using pip:

|

| 51 |

+

|

| 52 |

+

```bash

|

| 53 |

+

pip install minference

|

| 54 |

+

```

|

| 55 |

+

|

| 56 |

+

### How to use MInference

|

| 57 |

+

|

| 58 |

+

for HF,

|

| 59 |

+

```diff

|

| 60 |

+

from transformers import pipeline

|

| 61 |

+

+from minference import MInference

|

| 62 |

+

|

| 63 |

+

pipe = pipeline("text-generation", model=model_name, torch_dtype="auto", device_map="auto")

|

| 64 |

+

|

| 65 |

+

# Patch MInference Module

|

| 66 |

+

+minference_patch = MInference("minference", model_name)

|

| 67 |

+

+pipe.model = minference_patch(pipe.model)

|

| 68 |

+

|

| 69 |

+

pipe(prompt, max_length=10)

|

| 70 |

+

```

|

| 71 |

+

|

| 72 |

+

for vLLM,

|

| 73 |

+

|

| 74 |

+

```diff

|

| 75 |

+

from vllm import LLM, SamplingParams

|

| 76 |

+

+ from minference import MInference

|

| 77 |

+

|

| 78 |

+

llm = LLM(model_name, max_num_seqs=1, enforce_eager=True, max_model_len=128000)

|

| 79 |

+

|

| 80 |

+

# Patch MInference Module

|

| 81 |

+

+minference_patch = MInference("vllm", model_name)

|

| 82 |

+

+llm = minference_patch(llm)

|

| 83 |

+

|

| 84 |

+

outputs = llm.generate(prompts, sampling_params)

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

## FAQ

|

| 88 |

+

|

| 89 |

+

For more insights and answers, visit our [FAQ section](./Transparency_FAQ.md).

|

| 90 |

+

|

| 91 |

+

**Q1: How to effectively evaluate the impact of dynamic sparse attention on the capabilities of long-context LLMs?**

|

| 92 |

+

|

| 93 |

+

To effectively evaluate long-context LLM capabilities, we tested: 1) effective context window with RULER, 2) general long-context tasks with InfiniteBench, 3) retrieval tasks across different contexts and positions with Needle in a Haystack, and 4) language model prediction with PG-19.<br/>

|

| 94 |

+

We found that traditional methods perform poorly in retrieval tasks, with difficulty levels varying as follows: KV retrieval (every key as a needle) > Needle in a Haystack > Retrieval.Number > Retrieval PassKey. The key challenge is the semantic difference between needles and the haystack. Traditional methods perform better when the semantic difference is larger, as in passkey tasks. KV retrieval demands higher retrieval capabilities since any key can be a target, and multi-needle tasks are even more complex.<br/>

|

| 95 |

+

We will continue to update our results with more models and datasets in future versions.

|

| 96 |

+

|

| 97 |

+

**Q2: Does this dynamic sparse attention pattern only exist in long-context LLMs that are not fully trained?**

|

| 98 |

+

|

| 99 |

+

Firstly, attention is dynamically sparse, and this is true for both short- and long-contexts, a characteristic inherent to the attention mechanism.

|

| 100 |

+

Additionally, we selected the state-of-the-art open-source long-context LLM, LLaMA-3-8B-Instruct-1M, which has an effective context window size of 16K. With MInference, this can be extended to 32K.

|

| 101 |

+

We will continue to adapt our method to other advanced long-context LLMs and update our results. We will also explore the theoretical reasons behind this dynamic sparse attention pattern.

|

| 102 |

+

|

| 103 |

+

**Q3: What is the relationship between MInference, SSM, Linear Attention, and Sparse Attention?**

|

| 104 |

+

|

| 105 |

+

All four approaches (MInference, SSM, Linear Attention, and Sparse Attention) are efficient solutions for optimizing the high complexity of attention in Transformers, each introducing inductive bias from different perspectives. Notably, the latter three require training from scratch.

|

| 106 |

+

Additionally, recent works like Mamba-2 and Unified Implicit Attention Representation unify SSM and Linear Attention as static sparse attention. Mamba-2 itself is a block-wise sparse attention method.

|

| 107 |

+

Intuitively, the significant sparse redundancy in attention suggests that these approaches have potential. However, static sparse attention may not handle dynamic semantic associations well, especially in complex tasks. Dynamic sparse attention, on the other hand, holds potential for better managing these dynamic relationships.

|

| 108 |

+

|

| 109 |

+

## Citation

|

| 110 |

+

|

| 111 |

+

If you find MInference useful or relevant to your project and research, please kindly cite our paper:

|

| 112 |

+

|

| 113 |

+

```bibtex

|

| 114 |

+

@article{jiang2024minference,

|

| 115 |

+

title={MInference 1.0: Accelerating Pre-filling for Long-Context LLMs via Dynamic Sparse Attention},

|

| 116 |

+

author={Jiang, Huiqiang and Li, Yucheng and Zhang, Chengruidong and Wu, Qianhui and Luo, Xufang and Ahn, Surin and Han, Zhenhua and Abdi, Amir H and Li, Dongsheng and Lin, Chin-Yew and Yang, Yuqing and Qiu, Lili},

|

| 117 |

+

journal={arXiv},

|

| 118 |

+

year={2024}

|

| 119 |

+

}

|

| 120 |

+

```

|

| 121 |

+

|

| 122 |

+

## Contributing

|

| 123 |

+

|

| 124 |

+

This project welcomes contributions and suggestions. Most contributions require you to agree to a

|

| 125 |

+

Contributor License Agreement (CLA) declaring that you have the right to, and actually do, grant us

|

| 126 |

+

the rights to use your contribution. For details, visit https://cla.opensource.microsoft.com.

|

| 127 |

+

|

| 128 |

+

When you submit a pull request, a CLA bot will automatically determine whether you need to provide

|

| 129 |

+

a CLA and decorate the PR appropriately (e.g., status check, comment). Simply follow the instructions

|

| 130 |

+

provided by the bot. You will only need to do this once across all repos using our CLA.

|

| 131 |

+

|

| 132 |

+

This project has adopted the [Microsoft Open Source Code of Conduct](https://opensource.microsoft.com/codeofconduct/).

|

| 133 |

+

For more information see the [Code of Conduct FAQ](https://opensource.microsoft.com/codeofconduct/faq/) or

|

| 134 |

+

contact [[email protected]](mailto:[email protected]) with any additional questions or comments.

|

| 135 |

+

|

| 136 |

+

## Trademarks

|

| 137 |

+

|

| 138 |

+

This project may contain trademarks or logos for projects, products, or services. Authorized use of Microsoft

|

| 139 |

+

trademarks or logos is subject to and must follow

|

| 140 |

+

[Microsoft's Trademark & Brand Guidelines](https://www.microsoft.com/en-us/legal/intellectualproperty/trademarks/usage/general).

|

| 141 |

+

Use of Microsoft trademarks or logos in modified versions of this project must not cause confusion or imply Microsoft sponsorship.

|

| 142 |

+

Any use of third-party trademarks or logos are subject to those third-party's policies.

|

app.py

CHANGED

|

@@ -1,7 +1,148 @@

|

|

| 1 |

import gradio as gr

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 2 |

|

| 3 |

-

|

| 4 |

-

|

| 5 |

|

| 6 |

-

|

| 7 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

import gradio as gr

|

| 2 |

+

import os

|

| 3 |

+

import spaces

|

| 4 |

+

from transformers import GemmaTokenizer, AutoModelForCausalLM

|

| 5 |

+

from transformers import AutoModelForCausalLM, AutoTokenizer, TextIteratorStreamer

|

| 6 |

+

from threading import Thread

|

| 7 |

+

from minference import MInference

|

| 8 |

|

| 9 |

+

# Set an environment variable

|

| 10 |

+

HF_TOKEN = os.environ.get("HF_TOKEN", None)

|

| 11 |

|

| 12 |

+

|

| 13 |

+

DESCRIPTION = '''

|

| 14 |

+

<div>

|

| 15 |

+

<h1 style="text-align: center;">Meta Llama3 8B</h1>

|

| 16 |

+

<p>This Space demonstrates the instruction-tuned model <a href="https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct"><b>Meta Llama3 8b Chat</b></a>. Meta Llama3 is the new open LLM and comes in two sizes: 8b and 70b. Feel free to play with it, or duplicate to run privately!</p>

|

| 17 |

+

<p>🔎 For more details about the Llama3 release and how to use the model with <code>transformers</code>, take a look <a href="https://huggingface.co/blog/llama3">at our blog post</a>.</p>

|

| 18 |

+

<p>🦕 Looking for an even more powerful model? Check out the <a href="https://huggingface.co/chat/"><b>Hugging Chat</b></a> integration for Meta Llama 3 70b</p>

|

| 19 |

+

</div>

|

| 20 |

+

'''

|

| 21 |

+

|

| 22 |

+

LICENSE = """

|

| 23 |

+

<p/>

|

| 24 |

+

---

|

| 25 |

+

Built with Meta Llama 3

|

| 26 |

+

"""

|

| 27 |

+

|

| 28 |

+

PLACEHOLDER = """

|

| 29 |

+

<div style="padding: 30px; text-align: center; display: flex; flex-direction: column; align-items: center;">

|

| 30 |

+

<img src="https://ysharma-dummy-chat-app.hf.space/file=/tmp/gradio/8e75e61cc9bab22b7ce3dec85ab0e6db1da5d107/Meta_lockup_positive%20primary_RGB.jpg" style="width: 80%; max-width: 550px; height: auto; opacity: 0.55; ">

|

| 31 |

+

<h1 style="font-size: 28px; margin-bottom: 2px; opacity: 0.55;">Meta llama3</h1>

|

| 32 |

+

<p style="font-size: 18px; margin-bottom: 2px; opacity: 0.65;">Ask me anything...</p>

|

| 33 |

+

</div>

|

| 34 |

+

"""

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

css = """

|

| 38 |

+

h1 {

|

| 39 |

+

text-align: center;

|

| 40 |

+

display: block;

|

| 41 |

+

}

|

| 42 |

+

#duplicate-button {

|

| 43 |

+

margin: auto;

|

| 44 |

+

color: white;

|

| 45 |

+

background: #1565c0;

|

| 46 |

+

border-radius: 100vh;

|

| 47 |

+

}

|

| 48 |

+

"""

|

| 49 |

+

|

| 50 |

+

# Load the tokenizer and model

|

| 51 |

+

model_name = "gradientai/Llama-3-8B-Instruct-262k"

|

| 52 |

+

tokenizer = AutoTokenizer.from_pretrained(model_name)

|

| 53 |

+

model = AutoModelForCausalLM.from_pretrained(model_name, device_map="auto") # to("cuda:0")

|

| 54 |

+

minference_patch = MInference("minference", model_name)

|

| 55 |

+

model = minference_patch(model)

|

| 56 |

+

|

| 57 |

+

terminators = [

|

| 58 |

+

tokenizer.eos_token_id,

|

| 59 |

+

tokenizer.convert_tokens_to_ids("<|eot_id|>")

|

| 60 |

+

]

|

| 61 |

+

|

| 62 |

+

@spaces.GPU(duration=120)

|

| 63 |

+

def chat_llama3_8b(message: str,

|

| 64 |

+

history: list,

|

| 65 |

+

temperature: float,

|

| 66 |

+

max_new_tokens: int

|

| 67 |

+

) -> str:

|

| 68 |

+

"""

|

| 69 |

+

Generate a streaming response using the llama3-8b model.

|

| 70 |

+

Args:

|

| 71 |

+

message (str): The input message.

|

| 72 |

+

history (list): The conversation history used by ChatInterface.

|

| 73 |

+

temperature (float): The temperature for generating the response.

|

| 74 |

+

max_new_tokens (int): The maximum number of new tokens to generate.

|

| 75 |

+

Returns:

|

| 76 |

+

str: The generated response.

|

| 77 |

+

"""

|

| 78 |

+

conversation = []

|

| 79 |

+

for user, assistant in history:

|

| 80 |

+

conversation.extend([{"role": "user", "content": user}, {"role": "assistant", "content": assistant}])

|

| 81 |

+

conversation.append({"role": "user", "content": message})

|

| 82 |

+

|

| 83 |

+

input_ids = tokenizer.apply_chat_template(conversation, return_tensors="pt").to(model.device)

|

| 84 |

+

|

| 85 |

+

streamer = TextIteratorStreamer(tokenizer, timeout=10.0, skip_prompt=True, skip_special_tokens=True)

|

| 86 |

+

|

| 87 |

+

generate_kwargs = dict(

|

| 88 |

+

input_ids= input_ids,

|

| 89 |

+

streamer=streamer,

|

| 90 |

+

max_new_tokens=max_new_tokens,

|

| 91 |

+

do_sample=True,

|

| 92 |

+

temperature=temperature,

|

| 93 |

+

eos_token_id=terminators,

|

| 94 |

+

)

|

| 95 |

+

# This will enforce greedy generation (do_sample=False) when the temperature is passed 0, avoiding the crash.

|

| 96 |

+

if temperature == 0:

|

| 97 |

+

generate_kwargs['do_sample'] = False

|

| 98 |

+

|

| 99 |

+

t = Thread(target=model.generate, kwargs=generate_kwargs)

|

| 100 |

+

t.start()

|

| 101 |

+

|

| 102 |

+

outputs = []

|

| 103 |

+

for text in streamer:

|

| 104 |

+

outputs.append(text)

|

| 105 |

+

#print(outputs)

|

| 106 |

+

yield "".join(outputs)

|

| 107 |

+

|

| 108 |

+

|

| 109 |

+

# Gradio block

|

| 110 |

+

chatbot=gr.Chatbot(height=450, placeholder=PLACEHOLDER, label='Gradio ChatInterface')

|

| 111 |

+

|

| 112 |

+

with gr.Blocks(fill_height=True, css=css) as demo:

|

| 113 |

+

|

| 114 |

+

gr.Markdown(DESCRIPTION)

|

| 115 |

+

gr.DuplicateButton(value="Duplicate Space for private use", elem_id="duplicate-button")

|

| 116 |

+

gr.ChatInterface(

|

| 117 |

+

fn=chat_llama3_8b,

|

| 118 |

+

chatbot=chatbot,

|

| 119 |

+

fill_height=True,

|

| 120 |

+

additional_inputs_accordion=gr.Accordion(label="⚙️ Parameters", open=False, render=False),

|

| 121 |

+

additional_inputs=[

|

| 122 |

+

gr.Slider(minimum=0,

|

| 123 |

+

maximum=1,

|

| 124 |

+

step=0.1,

|

| 125 |

+

value=0.95,

|

| 126 |

+

label="Temperature",

|

| 127 |

+

render=False),

|

| 128 |

+

gr.Slider(minimum=128,

|

| 129 |

+

maximum=4096,

|

| 130 |

+

step=1,

|

| 131 |

+

value=512,

|

| 132 |

+

label="Max new tokens",

|

| 133 |

+

render=False ),

|

| 134 |

+

],

|

| 135 |

+

examples=[

|

| 136 |

+

['How to setup a human base on Mars? Give short answer.'],

|

| 137 |

+

['Explain theory of relativity to me like I’m 8 years old.'],

|

| 138 |

+

['What is 9,000 * 9,000?'],

|

| 139 |

+

['Write a pun-filled happy birthday message to my friend Alex.'],

|

| 140 |

+

['Justify why a penguin might make a good king of the jungle.']

|

| 141 |

+

],

|

| 142 |

+

cache_examples=False,

|

| 143 |

+

)

|

| 144 |

+

|

| 145 |

+

gr.Markdown(LICENSE)

|

| 146 |

+

|

| 147 |

+

if __name__ == "__main__":

|

| 148 |

+

demo.launch()

|

images/MInference1_onepage.png

ADDED

|

images/MInference_logo.png

ADDED

|

images/benchmarks/needle_viz_LLaMA-3-8B-1M_ours_1K_1000K.png

ADDED

|

images/benchmarks/ppl-LLaMA-3-262k.png

ADDED

|

minference/__init__.py

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright (c) 2024 Microsoft

|

| 2 |

+

# Licensed under The MIT License [see LICENSE for details]

|

| 3 |

+

# flake8: noqa

|

| 4 |

+

from .minference_configuration import MInferenceConfig

|

| 5 |

+

from .models_patch import MInference

|

| 6 |

+

from .ops.block_sparse_flash_attention import block_sparse_attention

|

| 7 |

+

from .ops.pit_sparse_flash_attention_v2 import vertical_slash_sparse_attention

|

| 8 |

+

from .ops.streaming_kernel import streaming_forward

|

| 9 |

+

from .patch import (

|

| 10 |

+

minference_patch,

|

| 11 |

+

minference_patch_kv_cache_cpu,

|

| 12 |

+

minference_patch_with_snapkv,

|

| 13 |

+

patch_hf,

|

| 14 |

+

)

|

| 15 |

+

from .version import VERSION as __version__

|

| 16 |

+

|

| 17 |

+

__all__ = [

|

| 18 |

+

"MInference",

|

| 19 |

+

"MInferenceConfig",

|

| 20 |

+

"minference_patch",

|

| 21 |

+

"minference_patch_kv_cache_cpu",

|

| 22 |

+

"minference_patch_with_snapkv",

|

| 23 |

+

"patch_hf",

|

| 24 |

+

"vertical_slash_sparse_attention",

|

| 25 |

+

"block_sparse_attention",

|

| 26 |

+

"streaming_forward",

|

| 27 |

+

]

|

minference/configs/Llama_3_8B_Instruct_262k_kv_out_v32_fit_o_best_pattern.json

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+