ALLaVA: Harnessing GPT4V-synthesized Data for A Lite Vision-Language Model

⚡ALLaVA is a project that provides a large-scale GPT4V-synthesized dataset for training LVLMs.⚡

📃 Paper • 🌐 Demo • 👨🏻‍💻 GitHub

🤗 ALLaVA-Phi3-mini-128k • 🤗 ALLaVA-StableLM2-1_6B • 🤗 ALLaVA-Phi2-2_7B

Benchmark Results

Our models ALLaVA-Phi3-mini-128k, ALLaVA-StableLM2-1_6B and ALLaVA-Phi2-2_7B achieve competitive results on 17 benchmarks.

| Models | Vicuna-80 | GQA | HallusionBench | MME-P | MMVP | TouchStone | TextVQA | MME-C | MathVista | MM-Vet | MMMU-val | SQA (img) | LLaVA (In-the-Wild) | MLLM-Bench | MMB-en | MMB-cn | SEEDBench (img, v1) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Large VLMs | | | | | | | | | | | | | | | | | |
| BLIP-2 | - | - | - | - | - | - | - | - | - | 22.4 | 34.4 | - | - | 3.0* | - | - | 49.7 |
| InstructBLIP | - | 49.5 | - | - | - | - | - | - | - | 25.6 | - | - | 58.2 | - | 44.0 | - | - |
| Qwen-VL-Chat | - | 57.5 | - | 1487.6 | - | - | 61.5 | 360.7 | - | 31.1 | - | 68.2 | - | - | 60.6 | 56.7 | 65.4 |
| LLaVA-1.5-7B | 13.8* | 62.0 | 36.6* | 1504.4* | 24.7* | 594.9* | 58.2 | 324.6* | 25.0* | 31.1 | 35.1* | 66.8 | 65.4 | 23.0* | 64.3 | 58.3 | 66.1 |
| LLaVA-1.5-13B | 22.5 | 63.3 | 36.5* | 1531.3 | 38.0* | 617.7* | 61.3 | 295.4 | 28.3* | 35.4 | 34.4* | 71.6 | 72.5 | - | 67.7 | 63.6 | 68.2 |
| LVIS-7B | - | 62.6 | - | - | - | - | 58.7 | - | - | 31.5 | - | - | 67.0 | 29.0* | 66.2 | - | - |
| LVIS-13B | - | 63.6* | - | - | - | - | 62.5* | - | - | 37.4* | - | - | 71.3* | - | 68.0* | - | - |
| ShareGPT4V-7B | 13.8* | 63.3 | 36.0* | 1540.1* | 34.0* | 637.2* | 60.4 | 346.1* | 24.7* | 37.6 | 35.4* | 68.4* | 72.6 | 30.2* | 68.8 | 61.0* | 69.7 |
| ShareGPT4V-13B | 17.5* | 64.8 | 39.0* | 1576.1* | 35.3* | 648.7* | 62.2 | 309.3* | 28.8* | 43.1 | 35.6* | 70.0* | 79.9 | 35.5* | 71.2 | 61.7* | 70.8 |
| 4B-scale Lite VLMs | | | | | | | | | | | | | | | | | |
| MobileVLM-v2 | 5.0* | 61.1 | 30.8* | 1440.5 | 18.7* | 541.0* | 57.5 | 261.8* | 28.3* | 26.1* | 30.8* | 70.0 | 53.2* | 15.7* | 63.2 | 43.2* | 64.5* |
| Mipha-3B | 16.2* | 63.9 | 34.3* | 1488.9 | 32.0* | 619.0* | 56.6 | 285.0* | 27.8* | 33.5* | 35.8* | 70.9 | 64.7* | 23.1* | 69.7 | 42.9* | 71.2* |
| TinyLLaVA | 15.6* | 62.1 | 37.2* | 1465.5* | 33.3* | 663.5* | 60.3 | 281.1* | 30.3* | 37.5 | 38.4 | 73.0 | 70.8* | 29.8* | 69.7* | 42.8* | 70.4* |
| Ours | | | | | | | | | | | | | | | | | |
| ALLaVA-Phi2 | 49.4 | 48.8 | 24.8 | 1316.2 | 36.0 | 632.0 | 49.5 | 301.8 | 27.4 | 32.2 | 35.3 | 67.6 | 69.4 | 43.6 | 64.0 | 40.8 | 65.2 |
| ALLaVA-StableLM2 | 38.8 | 49.8 | 25.3 | 1311.7 | 34.0 | 655.2 | 51.7 | 257.9 | 27.7 | 31.7 | 33.3 | 64.7 | 72.0 | 39.3 | 64.6 | 49.8 | 65.7 |
| ALLaVA-Phi3 | 56.9 | 52.2 | 48.1 | 1382.3 | 32.7 | 667.8 | 53.0 | 347.1 | 32.9 | 37.8 | 41.1 | 64.0 | 68.5 | 54.8 | 68.1 | 55.3 | 69.0 |

An asterisk (\*) denotes results from our own evaluation. Bold numbers are the best results among all 4B-scale LVLMs. Details of each benchmark are given in Table 4 of our technical report.

🏭 Inference

All models can be loaded from 🤗 with .from_pretrained().

Check out the example scripts and verify that your outputs match those shown in the scripts.
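As a rough illustration of loading with `.from_pretrained()`, the sketch below uses the Hugging Face `transformers` Auto classes. The repository ID layout (`FreedomIntelligence/<model name>`), the helper names `repo_id` and `load_allava`, and the use of `trust_remote_code=True` are assumptions made for this example, not details confirmed above; the example scripts remain the reference.

```python
def repo_id(model_name: str, org: str = "FreedomIntelligence") -> str:
    """Build a Hub repository ID. The org/name layout is an assumption."""
    return f"{org}/{model_name}"


def load_allava(model_name: str):
    """Load a model and its tokenizer from the Hub (downloads weights on first call)."""
    # Imported lazily so repo_id() works even without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    repo = repo_id(model_name)
    # Assumption: the checkpoint ships custom modeling code, hence trust_remote_code.
    tokenizer = AutoTokenizer.from_pretrained(repo, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(repo, trust_remote_code=True)
    return model, tokenizer


# Example call (not executed here because it downloads the weights):
# model, tokenizer = load_allava("ALLaVA-Phi3-mini-128k")
print(repo_id("ALLaVA-Phi3-mini-128k"))
```

Image preprocessing and prompt formatting are model-specific and are deliberately left out of this sketch.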

🏋️‍♂️ Training

Data

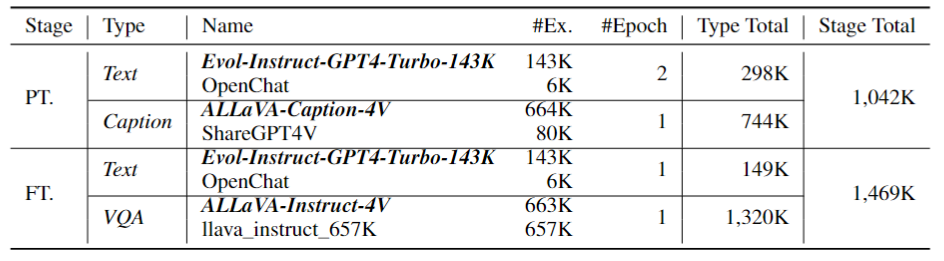

ALLaVA uses 1.0M samples for pretraining (PT) and 1.5M samples for fine-tuning (FT).

Code

The training code is largely based on LLaVA-v1.5. We wholeheartedly express our gratitude for their invaluable contributions to open-sourcing LVLMs.

Hyperparameters

| Global Batch Size | ZeRO Stage | Optimizer | Max LR | Min LR | Scheduler | Weight Decay |
|---|---|---|---|---|---|---|
| 256 (PT) / 128 (FT) | 1 | AdamW | 2e-5 | 2e-6 | CosineAnnealingWarmRestarts | 0 |
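To make the learning-rate schedule concrete, here is a minimal pure-Python sketch of cosine annealing with warm restarts, using the Max LR and Min LR values from the table. It follows the formula documented for PyTorch's `torch.optim.lr_scheduler.CosineAnnealingWarmRestarts` with a fixed cycle length. The restart period (`CYCLE_LEN`) is a hypothetical value, since the table does not state one, and the function name is illustrative only.

```python
import math

MAX_LR = 2e-5  # "Max LR" from the hyperparameter table
MIN_LR = 2e-6  # "Min LR" from the hyperparameter table


def cosine_warm_restart_lr(step: int, cycle_len: int,
                           max_lr: float = MAX_LR,
                           min_lr: float = MIN_LR) -> float:
    """Learning rate at `step` when the cosine cycle restarts every `cycle_len` steps."""
    t_cur = step % cycle_len  # position inside the current cycle
    cosine = 0.5 * (1.0 + math.cos(math.pi * t_cur / cycle_len))
    return min_lr + (max_lr - min_lr) * cosine


# Hypothetical restart period; the actual value used in training is not given.
CYCLE_LEN = 1000

for step in (0, 500, 999, 1000):
    print(step, f"{cosine_warm_restart_lr(step, CYCLE_LEN):.3e}")
```

The rate starts at the maximum, decays toward the minimum over one cycle, and jumps back to the maximum at each restart.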

The LM backbone and the projector are trainable, while the vision encoder is kept frozen. These settings are the same for both stages.
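One way to express this freezing scheme is to toggle `requires_grad` by parameter name, as in the sketch below. The prefix `vision_tower.` and both helper names are hypothetical; the actual module names depend on the training code. The helper works with any object exposing `named_parameters()`, such as a `torch.nn.Module`.

```python
# Hypothetical name prefix for the vision encoder's parameters.
FROZEN_PREFIXES = ("vision_tower.",)


def is_trainable(param_name: str, frozen_prefixes=FROZEN_PREFIXES) -> bool:
    """Return True unless the parameter belongs to a frozen module."""
    return not param_name.startswith(frozen_prefixes)


def apply_trainability(model, frozen_prefixes=FROZEN_PREFIXES) -> int:
    """Set requires_grad on every parameter and return the trainable tensor count.

    `model` is any object with named_parameters(), e.g. a torch.nn.Module.
    """
    n_trainable = 0
    for name, param in model.named_parameters():
        param.requires_grad = is_trainable(name, frozen_prefixes)
        n_trainable += int(param.requires_grad)
    return n_trainable
```

Because the same rule applies in both stages, the helper would be called once after the model is built and before the optimizer is created.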

📚 ALLaVA-4V Data

Most of the training data comes from ALLaVA-4V. See here to prepare it for training.

🙌 Contributors

Project Leader: Guiming Hardy Chen

Data: Shunian Chen, Junying Chen, Xiangbo Wu

Evaluation: Ruifei Zhang

Deployment: Xiangbo Wu, Zhiyi Zhang

Advising: Zhihong Chen, Benyou Wang

Others: Jianquan Li, Xiang Wan

📝 Citation

If you find our data useful, please consider citing our work! We are FreedomIntelligence, from the Shenzhen Research Institute of Big Data and The Chinese University of Hong Kong, Shenzhen.

@article{chen2024allava,
  title={ALLaVA: Harnessing GPT4V-synthesized Data for A Lite Vision-Language Model},
  author={Chen, Guiming Hardy and Chen, Shunian and Zhang, Ruifei and Chen, Junying and Wu, Xiangbo and Zhang, Zhiyi and Chen, Zhihong and Li, Jianquan and Wan, Xiang and Wang, Benyou},
  journal={arXiv preprint arXiv:2402.11684},
  year={2024}
}
