Model Details

Zion_Alpha is the first REAL Hebrew model in the world. This version WAS fine-tuned for tasks. I did the fine-tune using SOTA techniques and my insights from years of underwater basket weaving. If you wanna offer me a job, just add me on Facebook.

Another world record broken by Zion_Alpha!

On June 10th, 2024, this model achieved the highest sentiment analysis score in the world for Hebrew LLMs, with an impressive 70.3, surpassing even a 35B model that's five times its size!

Future Plans

My previous LLM, Zion_Alpha, set a world record on Hugging Face by achieving the highest SNLI score among open Hebrew LLMs (84.05). The current model, a SLERP merge, scored lower on SNLI but, surprisingly, achieved the highest sentiment analysis score (70.3). This suggests there is significant untapped potential in optimizing the training process, and that 7B models can deliver far more performance in Hebrew than previously thought possible. This will be my last Hebrew model for a while, as I have other adventures to pursue.
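The card does not say which merge tool, parent models, or interpolation factor produced the SLERP merge. Purely as an illustration of the technique, here is the textbook spherical linear interpolation formula applied to two flattened weight vectors. The function names and the `dot_threshold` cutoff for falling back to linear interpolation are illustrative assumptions, not the author's actual setup; merge tools such as mergekit apply a similar interpolation tensor by tensor across two checkpoints.

```python
import math


def lerp(t, v0, v1):
    """Plain linear interpolation between two equal-length vectors."""
    return [(1 - t) * a + t * b for a, b in zip(v0, v1)]


def slerp(t, v0, v1, dot_threshold=0.9995):
    """Spherical linear interpolation between two flat weight vectors.

    Returns v0 at t=0 and v1 at t=1. The angle is measured between the
    normalized vectors, but the weights are applied to the originals.
    dot_threshold is an illustrative cutoff (an assumption) below which
    the vectors are treated as (anti)parallel and lerp is used instead.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    if norm0 == 0.0 or norm1 == 0.0:
        # The angle is undefined for a zero vector.
        return lerp(t, v0, v1)

    cos_theta = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    if abs(cos_theta) > dot_threshold:
        # Nearly parallel: sin(theta) is tiny and the formula is unstable.
        return lerp(t, v0, v1)

    theta = math.acos(cos_theta)
    sin_theta = math.sin(theta)
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


# Halfway between two orthogonal unit vectors stays on the unit circle.
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

Unlike plain averaging, which would give `[0.5, 0.5]` here (norm about 0.71), SLERP keeps the interpolated vector at the same norm as its endpoints, which is the usual motivation for using it when merging weights.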

Looking for Sponsors

Since all my work is done on-premises, I am constrained by my current hardware. I would greatly appreciate any support in acquiring an A6000, which would enable me to train significantly larger models much faster.

Papers?

Maybe. We'll see. No promises here 🤓

Contact Details

I'm not great at self-marketing (to say the least) and don't have any social media accounts. If you'd like to reach out to me, you can email me at [email protected]. Please note that this email might receive more messages than I can handle, so I apologize in advance if I can't respond to everyone.

Versions and QUANTS

Model architecture

Based on Mistral 7B. I didn't even bother to alter the tokenizer.

The recommended prompt setting is Debug-deterministic:

- temperature: 1
- top_p: 1
- top_k: 1
- typical_p: 1
- min_p: 1
- repetition_penalty: 1
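Because top_k: 1 keeps only the single most likely token (and min_p: 1 likewise discards every token less probable than the top one), these settings amount to greedy decoding: the same prompt should give the same output every time, and temperature has no practical effect. The sketch below is a simplified, hypothetical sampler (not the code of any particular frontend) that applies temperature, top_k, min_p and top_p in one common order; real backends may order the filters differently. typical_p and repetition_penalty are left out because a value of 1 disables them.

```python
import math
import random


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sample_next_token(logits, temperature=1.0, top_k=0, top_p=1.0,
                      min_p=0.0, rng=random):
    """Hypothetical sampler: returns the index of the chosen token."""
    probs = softmax([x / temperature for x in logits])
    # Token ids sorted from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)

    # top_k: keep only the k most likely tokens (0 disables the filter).
    if top_k > 0:
        order = order[:top_k]

    # min_p: drop tokens below min_p * (probability of the top token).
    threshold = min_p * probs[order[0]]
    order = [i for i in order if probs[i] >= threshold]

    # top_p: keep the smallest prefix whose cumulative mass reaches top_p.
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


# With the recommended settings, every draw picks the argmax (index 1).
logits = [1.2, 3.4, 0.5, 3.3]
rng = random.Random(0)
picks = {sample_next_token(logits, temperature=1, top_k=1, top_p=1,
                           min_p=1, rng=rng) for _ in range(100)}
print(picks)  # {1}
```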

The recommended instruction template is Mistral:

{%- for message in messages %}
{%- if message['role'] == 'system' -%}
{{- message['content'] -}}
{%- else -%}
{%- if message['role'] == 'user' -%}
{{-'[INST] ' + message['content'].rstrip() + ' [/INST]'-}}
{%- else -%}
{{-'' + message['content'] + '</s>' -}}
{%- endif -%}
{%- endif -%}
{%- endfor -%}
{%- if add_generation_prompt -%}
{{-''-}}
{%- endif -%}
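To see what the template actually produces, here is a pure-Python mirror of its logic. The helper name `build_mistral_prompt` is hypothetical; in practice a library that renders the Jinja template (for example Hugging Face's `tokenizer.apply_chat_template`) would do this for you. Every tag uses whitespace control, so the pieces are simply concatenated. Note that a system message is glued directly in front of the first `[INST]` with no separator, and that `add_generation_prompt` contributes an empty string.

```python
def build_mistral_prompt(messages):
    """Hypothetical mirror of the Mistral chat template above."""
    parts = []
    for message in messages:
        if message["role"] == "system":
            # System text is emitted verbatim, with no wrapper tokens.
            parts.append(message["content"])
        elif message["role"] == "user":
            # Trailing whitespace is stripped from user turns only.
            parts.append("[INST] " + message["content"].rstrip() + " [/INST]")
        else:
            # Assistant turns are closed with the end-of-sequence token.
            parts.append(message["content"] + "</s>")
    # add_generation_prompt appends '' in this template, so nothing is added.
    return "".join(parts)


chat = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Translate to Hebrew: Good morning  "},
]
print(build_mistral_prompt(chat))
# You are a helpful assistant.[INST] Translate to Hebrew: Good morning [/INST]
```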

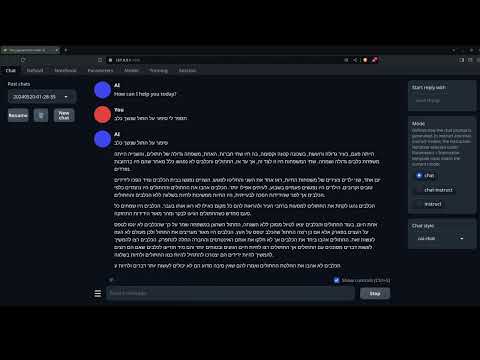

English to Hebrew example:

History

The model was originally trained about two months after Mistral 7B (v0.1) was released.

As of 4 June 2024, Zion_Alpha had the highest SNLI score (84.05) in the world among open-source Hebrew models, surpassing most other models by a large margin.

Support

- My Ko-fi page: ALL donations go toward research resources and compute. Every bit counts 🙏🏻

- My Patreon: ALL donations go toward research resources and compute. Every bit counts 🙏🏻
