Introduction

We introduce Xmodel-VLM, a multimodal vision-language model designed for efficient deployment on consumer GPU servers. It addresses a key obstacle to broad industry adoption of large-scale multimodal systems: their high serving cost.

Refer to our paper and GitHub repository for more details.

Running inference with Xmodel-VLM takes only a few lines of code, as shown below. Make sure you are using the latest version of the code and its matching virtual environment.

Inference example

import sys

import torch

import argparse

from PIL import Image

from pathlib import Path

import time

sys.path.append(str(Path(__file__).parent.parent.resolve()))

from xmodelvlm.model.xmodelvlm import load_pretrained_model

from xmodelvlm.conversation import conv_templates, SeparatorStyle

from xmodelvlm.utils import disable_torch_init, process_images, tokenizer_image_token, KeywordsStoppingCriteria

from xmodelvlm.constants import IMAGE_TOKEN_INDEX, DEFAULT_IMAGE_TOKEN

def inference_once(args):

disable_torch_init()

model_name = args.model_path.split('/')[-1]

tokenizer, model, image_processor, context_len = load_pretrained_model(args.model_path, args.load_8bit, args.load_4bit)

images = [Image.open(args.image_file).convert("RGB")]

images_tensor = process_images(images, image_processor, model.config).to(model.device, dtype=torch.float16)

conv = conv_templates[args.conv_mode].copy()

conv.append_message(conv.roles[0], DEFAULT_IMAGE_TOKEN + "\n" + args.prompt)

conv.append_message(conv.roles[1], None)

prompt = conv.get_prompt()

stop_str = conv.sep if conv.sep_style != SeparatorStyle.TWO else conv.sep2

# Input

input_ids = (tokenizer_image_token(prompt, tokenizer, IMAGE_TOKEN_INDEX, return_tensors="pt").unsqueeze(0).cuda())

stopping_criteria = KeywordsStoppingCriteria([stop_str], tokenizer, input_ids)

# Inference

with torch.inference_mode():

start_time = time.time()

output_ids = model.generate(

input_ids,

images=images_tensor,

do_sample=True if args.temperature > 0 else False,

temperature=args.temperature,

top_p=args.top_p,

num_beams=args.num_beams,

max_new_tokens=args.max_new_tokens,

use_cache=True,

stopping_criteria=[stopping_criteria],

)

end_time = time.time()

execution_time = end_time-start_time

print("the execution time (secend): ", execution_time)

# Result-Decode

input_token_len = input_ids.shape[1]

n_diff_input_output = (input_ids != output_ids[:, :input_token_len]).sum().item()

if n_diff_input_output > 0:

print(f"[Warning] {n_diff_input_output} output_ids are not the same as the input_ids")

outputs = tokenizer.batch_decode(output_ids[:, input_token_len:], skip_special_tokens=True)[0]

outputs = outputs.strip()

if outputs.endswith(stop_str):

outputs = outputs[: -len(stop_str)]

print(f"🚀 {model_name}: {outputs.strip()}\n")

if __name__ == '__main__':

model_path = "XiaoduoAILab/Xmodel_VLM" # model weight file

image_file = "assets/demo.jpg" # image file

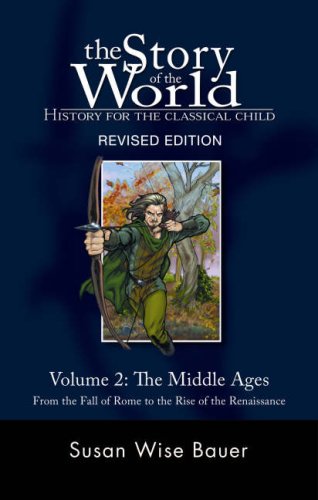

prompt_str = "Who is the author of this book?\nAnswer the question using a single word or phrase."

# (or) What is the title of this book?

# (or) Is this book related to Education & Teaching?

args = type('Args', (), {

"model_path": model_path,

"image_file": image_file,

"prompt": prompt_str,

"conv_mode": "v1",

"temperature": 0,

"top_p": None,

"num_beams": 1,

"max_new_tokens": 512,

"load_8bit": False,

"load_4bit": False,

})()

inference_once(args)

Prompt: Who is the author of this book?\nAnswer the question using a single word or phrase.

Author: Susan Wise Bauer
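The prompt-building step in `inference_once` (`conv_templates[args.conv_mode]`, `append_message`, `get_prompt`, and the choice of `stop_str`) can be illustrated in plain Python. This is a minimal sketch, assuming the `"v1"` template follows the Vicuna-v1 style common in LLaVA-derived code: roles `USER`/`ASSISTANT`, separators `" "` and `"</s>"`, alternating as `SeparatorStyle.TWO` suggests. The class `TwoSepConversation`, the system message, and the `"<image>"` placeholder value are hypothetical stand-ins, not the actual `xmodelvlm.conversation` implementation.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class TwoSepConversation:
    """Hypothetical stand-in for a two-separator chat template (not the xmodelvlm class)."""
    system: str
    roles: Tuple[str, str] = ("USER", "ASSISTANT")
    sep: str = " "       # assumed separator after user turns
    sep2: str = "</s>"   # assumed separator after assistant turns, reused as the stop string
    messages: List[Tuple[str, Optional[str]]] = field(default_factory=list)

    def append_message(self, role: str, message: Optional[str]) -> None:
        self.messages.append((role, message))

    def get_prompt(self) -> str:
        seps = [self.sep, self.sep2]
        prompt = self.system + seps[0]
        for i, (role, message) in enumerate(self.messages):
            if message:
                # Completed turn: "ROLE: text" followed by the alternating separator
                prompt += f"{role}: {message}{seps[i % 2]}"
            else:
                # Open turn: leave "ROLE:" for the model to continue
                prompt += f"{role}:"
        return prompt


IMAGE_PLACEHOLDER = "<image>"  # assumed value of DEFAULT_IMAGE_TOKEN

conv = TwoSepConversation(system="A chat between a user and an assistant.")  # placeholder system text
conv.append_message(conv.roles[0], IMAGE_PLACEHOLDER + "\n" + "Who is the author of this book?")
conv.append_message(conv.roles[1], None)
prompt = conv.get_prompt()
stop_str = conv.sep2  # two-separator style: stop at the assistant-turn separator
print(prompt)
```

The trailing open `ASSISTANT:` turn is what the model completes, which is why the script stops on the assistant-side separator under the two-separator style.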

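The result-decoding step of the script can be exercised without a GPU or the real tokenizer. The sketch below assumes, as the script does, that `output_ids` echoes the prompt tokens before the newly generated ones. `extract_answer`, `toy_decode`, and its vocabulary are hypothetical stand-ins for the script's decode block and `tokenizer.batch_decode`.

```python
from typing import Callable, List


def extract_answer(
    input_ids: List[int],
    output_ids: List[int],
    decode: Callable[[List[int]], str],
    stop_str: str,
) -> str:
    """Mirror the script's post-processing: drop echoed prompt tokens, strip, trim the stop string."""
    input_len = len(input_ids)
    # Count positions where the echoed prefix differs from the prompt (the script warns on this)
    n_diff = sum(a != b for a, b in zip(input_ids, output_ids[:input_len]))
    if n_diff > 0:
        print(f"[Warning] {n_diff} output_ids are not the same as the input_ids")
    text = decode(output_ids[input_len:]).strip()
    if text.endswith(stop_str):
        text = text[: -len(stop_str)]
    return text.strip()


# Hypothetical toy vocabulary standing in for the real tokenizer
VOCAB = {0: "USER:", 1: "ASSISTANT:", 2: " Susan", 3: " Wise", 4: " Bauer", 5: "</s>"}


def toy_decode(ids: List[int]) -> str:
    # Unlike batch_decode(..., skip_special_tokens=True), this keeps "</s>"
    return "".join(VOCAB[i] for i in ids)


prompt_ids = [0, 1]
generated = prompt_ids + [2, 3, 4, 5]
print(extract_answer(prompt_ids, generated, toy_decode, "</s>"))
```

Because `toy_decode` keeps special tokens, the trailing `</s>` is removed here by the stop-string trim; in the real script, `skip_special_tokens=True` may already have dropped it.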

Evaluation

We evaluate multimodal performance on nine benchmarks: VizWiz, ScienceQA-IMG (SQA^I), TextVQA (VQA^T), POPE, GQA, MMBench (MMB), MMBench-CN (MMB^CN), MM-Vet, and MME. The results are shown in the following table, where Res. is the input image resolution.

| Method | LLM | Res. | VizWiz | SQA^I | VQA^T | POPE | GQA | MMB | MMB^CN | MM-Vet | MME |
|---|---|---|---|---|---|---|---|---|---|---|---|
| OpenFlamingo | MPT-7B | 336 | - | - | 33.6 | - | - | 4.6 | - | - | - |
| BLIP-2 | Vicuna-13B | 224 | - | 61.0 | 42.5 | 85.3 | 41.0 | - | - | - | 1293.8 |
| MiniGPT-4 | Vicuna-7B | 224 | - | - | - | - | 32.2 | 23.0 | - | - | 581.7 |
| InstructBLIP | Vicuna-7B | 224 | - | 60.5 | 50.1 | - | 49.2 | - | - | - | - |
| InstructBLIP | Vicuna-13B | 224 | - | 63.1 | 50.7 | 78.9 | 49.5 | - | - | - | 1212.8 |
| Shikra | Vicuna-13B | 224 | - | - | - | - | - | 58.8 | - | - | - |
| Qwen-VL | Qwen-7B | 448 | - | 67.1 | 63.8 | - | 59.3 | 38.2 | - | - | 1487.6 |
| MiniGPT-v2 | LLaMA-7B | 448 | - | - | - | - | 60.3 | 12.2 | - | - | - |
| LLaVA-v1.5-13B | Vicuna-13B | 336 | 53.6 | 71.6 | 61.3 | 85.9 | 63.3 | 67.7 | 63.6 | 35.4 | 1531.3 |
| MobileVLM 1.7B | MobileLLaMA 1.4B | 336 | 26.3 | 54.7 | 41.5 | 84.5 | 56.1 | 53.2 | 16.67 | 21.7 | 1196.2 |
| Xmodel-VLM | Xmodel-LM 1.1B | 336 | 41.7 | 53.3 | 39.9 | 85.9 | 58.3 | 52.0 | 45.7 | 21.8 | 1250.7 |
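To read the two small-model rows side by side, the per-benchmark differences between Xmodel-VLM and MobileVLM 1.7B can be computed directly from the table. This is only arithmetic on the reported numbers; `score_deltas` is a hypothetical helper, and MME is a summed score on a much larger scale than the other columns, so its difference is not comparable in magnitude.

```python
# Scores copied from the MobileVLM 1.7B and Xmodel-VLM rows of the table above
BENCHMARKS = ["VizWiz", "SQA^I", "VQA^T", "POPE", "GQA", "MMB", "MMB^CN", "MM-Vet", "MME"]
MOBILEVLM_1_7B = [26.3, 54.7, 41.5, 84.5, 56.1, 53.2, 16.67, 21.7, 1196.2]
XMODEL_VLM = [41.7, 53.3, 39.9, 85.9, 58.3, 52.0, 45.7, 21.8, 1250.7]


def score_deltas(ours, baseline):
    """Return (ours - baseline) per benchmark, rounded to 2 decimals to hide float noise."""
    return {name: round(a - b, 2) for name, a, b in zip(BENCHMARKS, ours, baseline)}


deltas = score_deltas(XMODEL_VLM, MOBILEVLM_1_7B)
for name, d in deltas.items():
    print(f"{name:>7}: {d:+.2f}")

wins = [name for name, d in deltas.items() if d > 0]
print("Xmodel-VLM higher on:", ", ".join(wins))
```

On these figures, Xmodel-VLM scores higher on six of the nine benchmarks (largest gaps on MMB^CN and VizWiz) and slightly lower on SQA^I, VQA^T, and MMB.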
