
Dataset Card for CivilComments WILDS

Dataset Summary

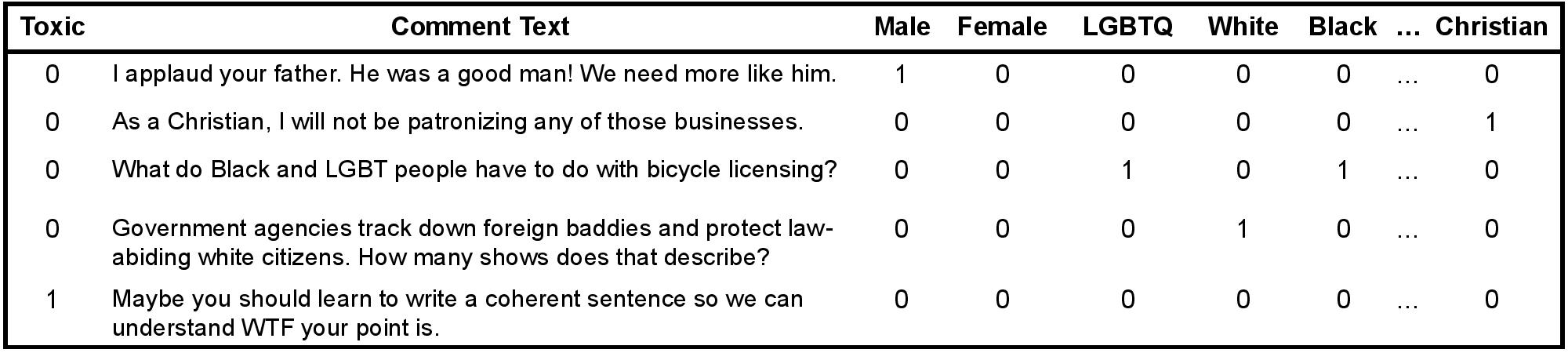

Automatic review of user-generated text (e.g., detecting toxic comments) is an important tool for moderating the sheer volume of text written on the Internet. Unfortunately, prior work has shown that such toxicity classifiers pick up on biases in the training data and spuriously associate toxicity with the mention of certain demographics (Park et al., 2018; Dixon et al., 2018). These spurious correlations can significantly degrade model performance on particular subpopulations (Sagawa et al., 2020).
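Because an average accuracy can hide poor performance on a subpopulation, it helps to measure accuracy separately per group and report the lowest value (worst-group accuracy). The sketch below is a minimal illustration in plain Python. It is not the WILDS package's evaluation code, and the toy labels, predictions, and group names ("none", "identity") are hypothetical. The WILDS benchmark defines its own groups for this dataset.

```python
from collections import defaultdict


def group_accuracies(labels, preds, groups):
    """Return accuracy computed separately for each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for y, p, g in zip(labels, preds, groups):
        total[g] += 1
        correct[g] += int(y == p)
    return {g: correct[g] / total[g] for g in total}


def worst_group_accuracy(labels, preds, groups):
    """Return the lowest per-group accuracy."""
    return min(group_accuracies(labels, preds, groups).values())


# Hypothetical toy data: 1 = toxic, 0 = non-toxic.
labels = [0, 0, 1, 1, 0, 0, 1, 0]
preds = [0, 0, 1, 1, 1, 1, 1, 0]
# Hypothetical group per comment: whether it mentions a demographic identity.
groups = ["none"] * 4 + ["identity"] * 4

overall = sum(int(y == p) for y, p in zip(labels, preds)) / len(labels)
print(overall)                                          # 0.75
print(group_accuracies(labels, preds, groups))          # {'none': 1.0, 'identity': 0.5}
print(worst_group_accuracy(labels, preds, groups))      # 0.5
```

Here the overall accuracy of 0.75 masks the fact that the classifier wrongly flags non-toxic comments that mention an identity, which drops accuracy on that group to 0.5.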

Supported Tasks and Leaderboards

[More Information Needed]

Languages

English

Dataset Structure

Data Instances

[More Information Needed]

Data Fields

[More Information Needed]

Data Splits

[More Information Needed]

Dataset Creation

Curation Rationale

[More Information Needed]

Source Data

Initial Data Collection and Normalization

[More Information Needed]

Who are the source language producers?

[More Information Needed]

Annotations

Annotation process

[More Information Needed]

Who are the annotators?

[More Information Needed]

Personal and Sensitive Information

[More Information Needed]

Considerations for Using the Data

Social Impact of Dataset

[More Information Needed]

Discussion of Biases

[More Information Needed]

Other Known Limitations

[More Information Needed]

Additional Information

Dataset Curators

[More Information Needed]

Licensing Information

This dataset is in the public domain and is distributed under CC0.

Citation Information

@inproceedings{wilds2021, title = {{WILDS}: A Benchmark of in-the-Wild Distribution Shifts}, author = {Pang Wei Koh and Shiori Sagawa and Henrik Marklund and Sang Michael Xie and Marvin Zhang and Akshay Balsubramani and Weihua Hu and Michihiro Yasunaga and Richard Lanas Phillips and Irena Gao and Tony Lee and Etienne David and Ian Stavness and Wei Guo and Berton A. Earnshaw and Imran S. Haque and Sara Beery and Jure Leskovec and Anshul Kundaje and Emma Pierson and Sergey Levine and Chelsea Finn and Percy Liang}, booktitle = {International Conference on Machine Learning (ICML)}, year = {2021} }

@inproceedings{borkan2019nuanced, title={Nuanced metrics for measuring unintended bias with real data for text classification}, author={Borkan, Daniel and Dixon, Lucas and Sorensen, Jeffrey and Thain, Nithum and Vasserman, Lucy}, booktitle={Companion Proceedings of The 2019 World Wide Web Conference}, pages={491--500}, year={2019} }

@article{DBLP:journals/corr/abs-2211-09110, author = {Percy Liang and Rishi Bommasani and Tony Lee and Dimitris Tsipras and Dilara Soylu and Michihiro Yasunaga and Yian Zhang and Deepak Narayanan and Yuhuai Wu and Ananya Kumar and Benjamin Newman and Binhang Yuan and Bobby Yan and Ce Zhang and Christian Cosgrove and Christopher D. Manning and Christopher R{\'{e}} and Diana Acosta{-}Navas and Drew A. Hudson and Eric Zelikman and Esin Durmus and Faisal Ladhak and Frieda Rong and Hongyu Ren and Huaxiu Yao and Jue Wang and Keshav Santhanam and Laurel J. Orr and Lucia Zheng and Mert Y{\"{u}}ksekg{\"{o}}n{\"{u}}l and Mirac Suzgun and Nathan Kim and Neel Guha and Niladri S. Chatterji and Omar Khattab and Peter Henderson and Qian Huang and Ryan Chi and Sang Michael Xie and Shibani Santurkar and Surya Ganguli and Tatsunori Hashimoto and Thomas Icard and Tianyi Zhang and Vishrav Chaudhary and William Wang and Xuechen Li and Yifan Mai and Yuhui Zhang and Yuta Koreeda}, title = {Holistic Evaluation of Language Models}, journal = {CoRR}, volume = {abs/2211.09110}, year = {2022}, url = {https://doi.org/10.48550/arXiv.2211.09110}, doi = {10.48550/arXiv.2211.09110}, eprinttype = {arXiv}, eprint = {2211.09110}, timestamp = {Wed, 23 Nov 2022 18:03:56 +0100}, biburl = {https://dblp.org/rec/journals/corr/abs-2211-09110.bib}, bibsource = {dblp computer science bibliography, https://dblp.org} }

Contributions

[More Information Needed]
