metadata

license: mit

library_name: sklearn

tags:

- sklearn

- skops

- text-classification

model_format: pickle

model_file: skops-ngrzbpwh.pkl

Model description

This is a random forest classifier trained on the JeVeuxAider dataset. As input, the model takes text embeddings encoded with camembert-base (768 dimensions per embedding), supplied as 2304 numeric feature columns named avg_1 through max_768.
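The feature names in this card (avg_1 ... max_768) suggest that token embeddings are pooled into fixed-size vectors before classification. The sketch below illustrates that idea under stated assumptions: that avg_ columns are mean-pooled and max_ columns are max-pooled over tokens, and that random numbers stand in for real camembert-base output. The function name pool_embeddings is hypothetical. The card lists 2304 columns (three groups of 768) but elides the middle group, so it is not reconstructed here and the result cannot be passed to the pipeline as is.

```python
import numpy as np
import pandas as pd

HIDDEN_SIZE = 768  # hidden size of camembert-base


def pool_embeddings(token_embeddings):
    """Collapse (n_tokens, 768) token embeddings into named pooled features.

    Assumption: avg_* is the mean over tokens, max_* is the max over tokens.
    """
    avg = token_embeddings.mean(axis=0)
    mx = token_embeddings.max(axis=0)
    features = {f"avg_{i + 1}": avg[i] for i in range(HIDDEN_SIZE)}
    features.update({f"max_{i + 1}": mx[i] for i in range(HIDDEN_SIZE)})
    return features


# Random data standing in for the token embeddings of one 12-token text.
rng = np.random.default_rng(0)
fake_tokens = rng.normal(size=(12, HIDDEN_SIZE))

# One row per text; only two of the three column groups are produced here.
row = pd.DataFrame([pool_embeddings(fake_tokens)])
```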

Intended uses & limitations

This model is not ready to be used in production.

Training Procedure

[More Information Needed]

Hyperparameters


| Hyperparameter | Value |

|---|---|

| memory | |

| steps | [('columntransformer', ColumnTransformer(transformers=[('num', Pipeline(steps=[('imputer', SimpleImputer(strategy='median')), ('scaler', StandardScaler()), ('pca', PCA(n_components=689))]), Index(['avg_1', 'avg_2', 'avg_3', 'avg_4', 'avg_5', 'avg_6', 'avg_7', 'avg_8', 'avg_9', 'avg_10', ... 'max_759', 'max_760', 'max_761', 'max_762', 'max_763', 'max_764', 'max_765', 'max_766', 'max_767', 'max_768'], dtype='object', length=2304))], verbose_feature_names_out=False)), ('randomforestclassifier', RandomForestClassifier(max_depth=15, max_features=20, min_samples_split=10, random_state=42))] |

| verbose | False |

| columntransformer | ColumnTransformer(transformers=[('num', Pipeline(steps=[('imputer', SimpleImputer(strategy='median')), ('scaler', StandardScaler()), ('pca', PCA(n_components=689))]), Index(['avg_1', 'avg_2', 'avg_3', 'avg_4', 'avg_5', 'avg_6', 'avg_7', 'avg_8', 'avg_9', 'avg_10', ... 'max_759', 'max_760', 'max_761', 'max_762', 'max_763', 'max_764', 'max_765', 'max_766', 'max_767', 'max_768'], dtype='object', length=2304))], verbose_feature_names_out=False) |

| randomforestclassifier | RandomForestClassifier(max_depth=15, max_features=20, min_samples_split=10, random_state=42) |

| columntransformer__n_jobs | |

| columntransformer__remainder | drop |

| columntransformer__sparse_threshold | 0.3 |

| columntransformer__transformer_weights | |

| columntransformer__transformers | [('num', Pipeline(steps=[('imputer', SimpleImputer(strategy='median')), ('scaler', StandardScaler()), ('pca', PCA(n_components=689))]), Index(['avg_1', 'avg_2', 'avg_3', 'avg_4', 'avg_5', 'avg_6', 'avg_7', 'avg_8', 'avg_9', 'avg_10', ... 'max_759', 'max_760', 'max_761', 'max_762', 'max_763', 'max_764', 'max_765', 'max_766', 'max_767', 'max_768'], dtype='object', length=2304))] |

| columntransformer__verbose | False |

| columntransformer__verbose_feature_names_out | False |

| columntransformer__num | Pipeline(steps=[('imputer', SimpleImputer(strategy='median')), ('scaler', StandardScaler()), ('pca', PCA(n_components=689))]) |

| columntransformer__num__memory | |

| columntransformer__num__steps | [('imputer', SimpleImputer(strategy='median')), ('scaler', StandardScaler()), ('pca', PCA(n_components=689))] |

| columntransformer__num__verbose | False |

| columntransformer__num__imputer | SimpleImputer(strategy='median') |

| columntransformer__num__scaler | StandardScaler() |

| columntransformer__num__pca | PCA(n_components=689) |

| columntransformer__num__imputer__add_indicator | False |

| columntransformer__num__imputer__copy | True |

| columntransformer__num__imputer__fill_value | |

| columntransformer__num__imputer__keep_empty_features | False |

| columntransformer__num__imputer__missing_values | nan |

| columntransformer__num__imputer__strategy | median |

| columntransformer__num__imputer__verbose | deprecated |

| columntransformer__num__scaler__copy | True |

| columntransformer__num__scaler__with_mean | True |

| columntransformer__num__scaler__with_std | True |

| columntransformer__num__pca__copy | True |

| columntransformer__num__pca__iterated_power | auto |

| columntransformer__num__pca__n_components | 689 |

| columntransformer__num__pca__n_oversamples | 10 |

| columntransformer__num__pca__power_iteration_normalizer | auto |

| columntransformer__num__pca__random_state | |

| columntransformer__num__pca__svd_solver | auto |

| columntransformer__num__pca__tol | 0.0 |

| columntransformer__num__pca__whiten | False |

| randomforestclassifier__bootstrap | True |

| randomforestclassifier__ccp_alpha | 0.0 |

| randomforestclassifier__class_weight | |

| randomforestclassifier__criterion | gini |

| randomforestclassifier__max_depth | 15 |

| randomforestclassifier__max_features | 20 |

| randomforestclassifier__max_leaf_nodes | |

| randomforestclassifier__max_samples | |

| randomforestclassifier__min_impurity_decrease | 0.0 |

| randomforestclassifier__min_samples_leaf | 1 |

| randomforestclassifier__min_samples_split | 10 |

| randomforestclassifier__min_weight_fraction_leaf | 0.0 |

| randomforestclassifier__n_estimators | 100 |

| randomforestclassifier__n_jobs | |

| randomforestclassifier__oob_score | False |

| randomforestclassifier__random_state | 42 |

| randomforestclassifier__verbose | 0 |

| randomforestclassifier__warm_start | False |

Model Plot

Pipeline(steps=[('columntransformer', ColumnTransformer(transformers=[('num', Pipeline(steps=[('imputer', SimpleImputer(strategy='median')), ('scaler', StandardScaler()), ('pca', PCA(n_components=689))]), Index(['avg_1', 'avg_2', 'avg_3', 'avg_4', 'avg_5', 'avg_6', 'avg_7', 'avg_8', 'avg_9', 'avg_10', ... 'max_759', 'max_760', 'max_761', 'max_762', 'max_763', 'max_764', 'max_765', 'max_766', 'max_767', 'max_768'], dtype='object', length=2304))], verbose_feature_names_out=False)), ('randomforestclassifier', RandomForestClassifier(max_depth=15, max_features=20, min_samples_split=10, random_state=42))])

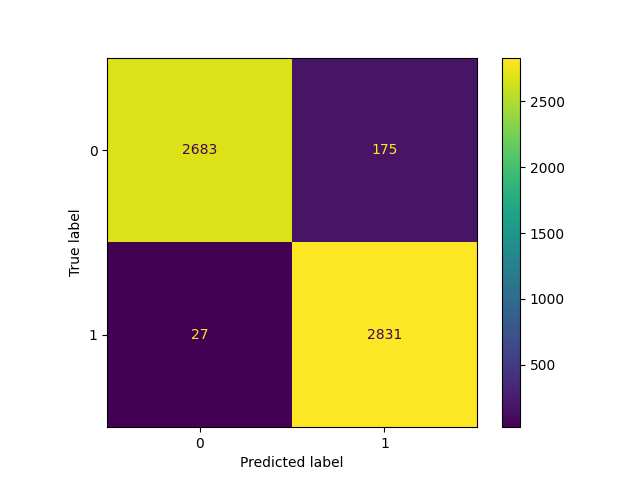

Evaluation Results

| Metric | Value |

|---|---|

| accuracy | 0.964661 |

| f1 score | 0.964637 |
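As an illustration of how metrics like these are typically computed with scikit-learn, the sketch below scores a handful of made-up labels. The card does not say which F1 averaging was used, so average="weighted" is an assumption, and the label arrays are invented for the example rather than taken from the JeVeuxAider evaluation.

```python
from sklearn.metrics import accuracy_score, confusion_matrix, f1_score

# Invented labels and predictions, for illustration only.
y_true = [0, 0, 1, 1, 1, 0]
y_pred = [0, 1, 1, 1, 0, 0]

acc = accuracy_score(y_true, y_pred)

# Assumption: weighted averaging over classes; the card does not specify it.
f1 = f1_score(y_true, y_pred, average="weighted")

# Rows are true classes, columns are predicted classes.
cm = confusion_matrix(y_true, y_pred)
```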

Confusion Matrix

How to Get Started with the Model

[More Information Needed]

Model Card Authors

huynhdoo

Model Card Contact

You can contact the model card authors through the following channels: [More Information Needed]

Citation

BibTeX

@inproceedings{...,year={2023}}

get_started_code

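import pickle

# File name taken from the model_file entry in the card metadata
pkl_filename = "skops-ngrzbpwh.pkl"

# Load the fitted scikit-learn pipeline from the pickle file
with open(pkl_filename, "rb") as file:
    pipe = pickle.load(file)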